How Will My Music Sound on Spotify?

Streaming loudness normalization and data compression can be a bit of a mystery. In this article, we investigate how Spotify performs these processes.

In 2021, the vast majority of listeners are consuming music through streaming services. And though they’ll forever have their fans, the days of LPs, tapes, CDs, and even MP3 downloads being the standards for music consumption are waning. As audio engineers, it’s critical to understand what happens when we upload a final version of our songs to streaming services, since this is what most listeners are likely to hear.

One of the biggest players in streaming, Spotify, just updated its information on loudness normalization and file formats, so we thought this would be a good time to go through the most important parts and break down what it means for us as music producers and audio engineers.

Note, though, that platforms like Spotify aren’t static; they evolve, and their standards are updated over time. It’s on us to stay informed and ahead of the curve.

A brisk history of loudness normalization

When Spotify first introduced loudness normalized playback to its platform, the purpose was to create a better listening experience for users (following in the footsteps of terrestrial radio and Apple’s iTunes). Rather than having to adjust the volume when shuffling between songs, Spotify would measure the perceptual loudness and adjust the volume for you. Nice and easy! But whether they knew it at the time or not, this accelerated awareness about loudness normalization among producers and engineers, increasingly influencing the sound of music production overall.

In the world of digital audio, there is a maximum sample peak level for a file that we refer to as 0 dBFS (decibels relative to full scale). If your levels exceed 0 dBFS when you bounce a file, it will be digitally clipped at a maximum of 0 dBFS (and probably sound pretty nasty). You can solve this with a limiter like the one in Ozone Advanced.
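To make the 0 dBFS ceiling concrete, here is a minimal Python sketch. It assumes floating-point samples where 1.0 represents full scale; the function names and the four-sample test signal are made up for illustration and are not part of any iZotope or Spotify tool.

```python
import math

def sample_peak_dbfs(samples):
    """Return the sample peak of float samples (1.0 = full scale) in dBFS."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")  # digital silence has no finite level
    return 20 * math.log10(peak)

def hard_clip(samples, ceiling=1.0):
    """Clamp every sample to +/- ceiling, which is what a clipped bounce does."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]

# Hypothetical toy signal whose loudest samples overshoot full scale.
hot = [0.5, 1.2, -1.2, 0.25]
clipped = hard_clip(hot)

print(round(sample_peak_dbfs(hot), 2))  # peak level before the bounce (above 0 dBFS)
print(sample_peak_dbfs(clipped))        # 0.0: the overshoot has been flattened
```

The clipped samples are simply flattened at full scale, which is where the distortion comes from. A limiter avoids this by reducing gain around the peaks instead of chopping them off.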

Historically, methods used to determine audio loudness were based entirely on amplitude measurements and didn't consider perceived loudness. In 2006, the International Telecommunication Union (ITU) introduced the first version of their “Algorithms to measure audio programme loudness and true-peak audio level.” This introduced the measurement we now express in LUFS (loudness units relative to full scale). One of the first applications was to create more consistency between the loudness of a TV show and the commercial break, turning down those super-loud commercials that would grab your attention back in the mid-2000s. Now, Spotify and other streaming services are using updated versions of the same algorithms to set the playback levels of each song and album.

In order to create the same consistency for music listeners, Spotify and some other streaming services “loudness normalize” songs, or set them to the same standard level using various systems including Integrated LUFS. In 2021, Spotify adopted a level standard of -14 Integrated LUFS. What does that mean practically for audio engineers? Using an application like RX 11 Advanced, we can recreate the normalization ourselves and find out.

Recreating Spotify’s normalization in RX

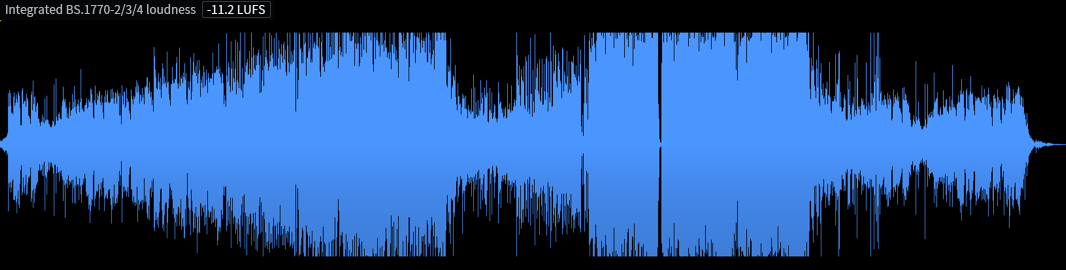

In the RX Waveform Statistics window, you can view the integrated loudness of an audio file. My song has an integrated loudness measurement of -11.2 LUFS. The convenient thing about loudness units is that they map one-to-one onto decibels: a gain change of 1 dB changes the loudness by 1 LU. To normalize my song to -14 LUFS, all I need to do is turn the gain down by 2.8 dB (14 - 11.2 = 2.8):

Waveform and integrated loudness for Please, Go (before normalization)

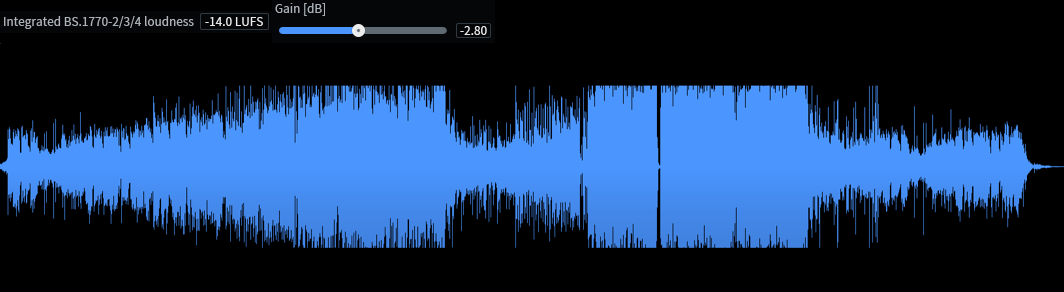

Waveform and integrated loudness for Please, Go (after normalization)

We can see that the song has been turned down, but that’s all that’s happening. It’s not like Spotify is using dynamic range compression on my song, just applying negative gain. This can be a really useful technique when mastering, because now that my song is at Spotify’s loudness levels I can do an A/B comparison with any other reference song on the platform!

For demonstration, I’ll show what would happen if I made a much louder master by lowering the Maximizer threshold by 3 dB:

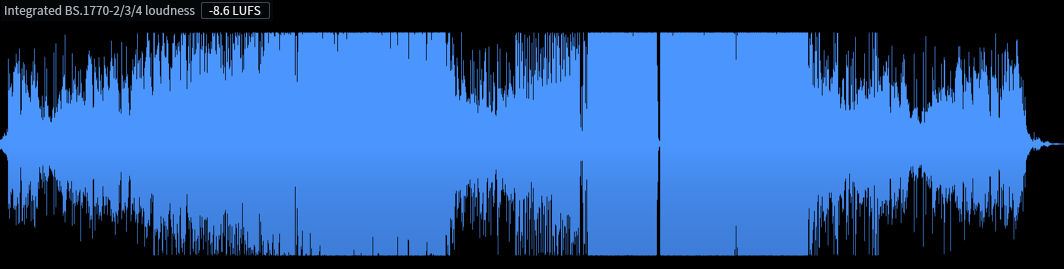

Waveform and integrated loudness for a more limited version of Please, Go (before normalization)

Without changing the peak level, I’ve increased the integrated loudness of my master to -8.6 LUFS. The choruses now look more like rectangles without any edges poking out. Running the same simple math:

14 - 8.6 = 5.4

So Spotify will turn this new master down by 5.4 dB to normalize to -14 LUFS.
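The arithmetic for both masters can be sketched in a few lines of Python. This only illustrates the subtraction described here and is not Spotify's actual implementation; the function names are our own, and the loudness values are the ones measured in RX.

```python
TARGET_LUFS = -14.0  # Spotify's default playback level, as described in this article

def normalization_gain_db(integrated_lufs, target_lufs=TARGET_LUFS):
    """Gain in dB that moves a track from its measured loudness to the target."""
    return target_lufs - integrated_lufs

def db_to_linear(gain_db):
    """Convert a gain in dB to the factor every sample is multiplied by."""
    return 10 ** (gain_db / 20)

for name, lufs in [("original master", -11.2), ("louder master", -8.6)]:
    gain = normalization_gain_db(lufs)
    # A negative gain means the track is turned down.
    print(f"{name}: {gain:+.1f} dB (samples scaled by {db_to_linear(gain):.3f})")
```

Because the gain is a single multiplier applied to every sample, the shape of the waveform (and therefore the dynamics) is untouched. Only the overall level changes.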

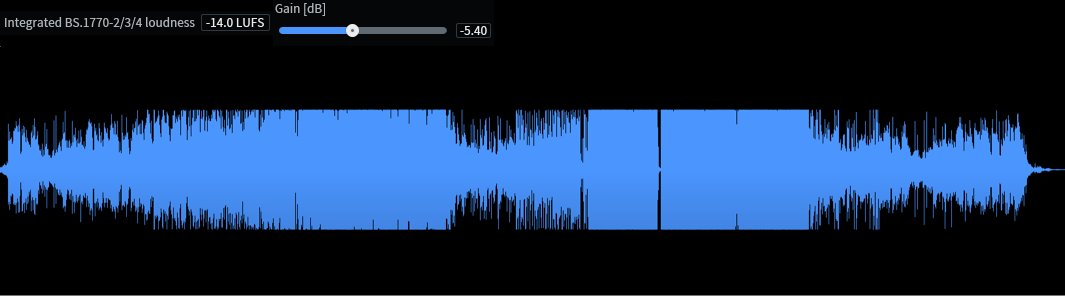

Waveform and integrated loudness for a more limited version of Please, Go (after normalization)

The really interesting thing is to zoom into the waveform and compare the hard-pushed, loud master with the original after they’ve both been normalized:

We can see that all we’ve done is chop off the peaks of the drums, adding distortion. In the sample-peak-normalized world of CDs, when tracks weren’t normalized according to their perceived loudness, it made sense to push the limiter hard. Now that we’re in the age of loudness-normalized streaming, how hard we push the limiter is more a matter of creative expression than a technical requirement. Just pushing it for the sake of pushing it isn’t doing our song any favors on loudness-normalized platforms!

You can hear the two versions after normalization here:

Spotify recommends that you target -14 LUFS for your song. If you do this, Spotify will not apply any increase or decrease in gain, and your song will play back on Spotify at the exact same level at which you bounced it.

We recommend that you experiment with different loudness levels, normalizing the tracks yourself with the procedure above, and then comparing them to reference songs on Spotify.

Data compression

It can be easy to get confused about “compression” and loudness normalization. We’re typically most familiar with dynamic range compression (think compressors and limiters), but the data compression that occurs in converting audio to different codecs is a bit different.

Codec

The most famous codec is MP3, but Spotify opts to use a free, open-source codec called Ogg Vorbis. (This is maintained by an organization called Xiph, which also maintains the FLAC codec.) Because Vorbis is a lossy codec, it shrinks the file by discarding audio information it judges to be least audible, so the decoded waveform is no longer identical to the original. This is a different process from dynamic range compression. Data compression to a lossless codec, like FLAC, reproduces the original samples exactly.

Bit-rate

Depending on a user’s Streaming Quality setting in their Spotify preferences, they will get a different bit-rate. Very High quality gives you 320 kbps, High is 160 kbps, and Normal is 96 kbps. The higher the bit-rate, the less information is lost in the encoding. We audio engineers have no control over what quality setting a listener will use, but we can at least get a sense of how our song will sound. Using RX 11 Advanced, we can export the song to OGG at each of these bit-rates and listen to the results.

A fun thing we can do is to perform a null test. If we take one of the compressed files and invert its polarity, then play it back in sync with the original, everything the two files have in common cancels out. The result is what the data compression has removed from the audio:

Even though they sound strange, I really enjoy listening to these null tests. It’s fascinating to hear what information is being removed in order to make the file smaller. While there’s very little lost at the highest quality setting, there’s quite a bit of music being lost at normal quality. Still, when I listen to the Normal quality version, it doesn’t sound terrible to me.
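The null test itself is just a sample-by-sample subtraction. Here is a minimal Python sketch of the idea. Note the assumptions: the "lossy" version below is produced by coarse rounding, which is only a stand-in and behaves nothing like Vorbis, and the helper names and test tone are invented for the example. With real files you would also need to make sure the two versions are sample-aligned before subtracting.

```python
import math

def null_test(original, processed):
    """Invert the polarity of `processed` and sum it with `original`."""
    return [a + (-b) for a, b in zip(original, processed)]

def rms_dbfs(samples):
    """RMS level in dBFS (1.0 = full scale); -inf for digital silence."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")

# Toy signal: one second of a 440 Hz sine at an 8 kHz sample rate.
original = [0.5 * math.sin(2 * math.pi * 440 * n / 8000) for n in range(8000)]

# Stand-in for a lossy encode/decode round trip (NOT a real codec).
degraded = [round(s * 64) / 64 for s in original]

print(rms_dbfs(null_test(original, original)))            # -inf: identical files cancel completely
print(round(rms_dbfs(null_test(original, degraded)), 1))  # level of the material that was lost
```

The quieter the residual, the closer the processed file is to the original, which matches what we hear when comparing the Very High and Normal quality null tests.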

Peak level

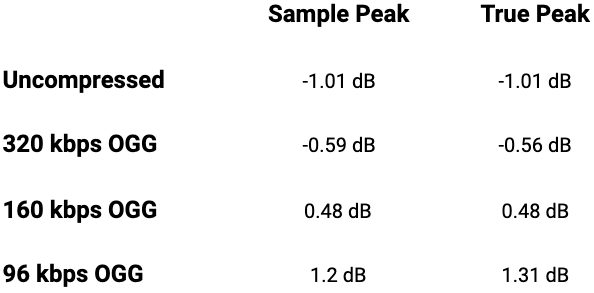

When a song gets encoded into OGG Vorbis (or any other lossy codec), its peak sample level will change (and almost always increase). You can see this if you export a WAV file into OGG using RX, import the OGG file, and check the peak levels in the Waveform Statistics view. For example, here are the sample peaks and true peaks for my song at the various bit rates we heard above:

We can see that after data compression, the peak levels of my song have increased, and the lower the bit-rate, the larger the increase. This means my song will be digitally clipped at any quality setting other than Very High Quality. For this reason, Spotify recommends using a true peak limiter with a ceiling of no higher than -2 dBTP (see the bottom of the linked page).

Track not as loud as others?

There’s nothing worse than finally listening to your song on release day and thinking it doesn’t sound good or doesn’t sound as loud as whatever reference song you play next. Since all songs are normalized to the same loudness, there shouldn’t be any loudness differences. However, Spotify calls out certain problems that could cause your song to measure at a higher LUFS value than it should, and so be turned down more than you expect:

Your track is just too crushed

As described in the first section, an extra push into the limiter raises your track's integrated loudness, so Spotify turns it down further. If your song's waveform looks like a rectangle throughout, it may sound quiet compared to the loud sections of a more dynamically mastered song.

Your playback system has an uneven or unreliable frequency response

It should be pretty obvious, but Spotify doesn’t know and can’t compensate for your personal monitoring system. If your playback system has an uneven frequency response, boosting or lacking certain frequencies, your perception of loudness in your listening environment may not consistently line up with the integrated LUFS value for your track.

Spotify uses this LUFS value to inform the resulting loudness normalization. So if your experience of hearing music on your system doesn't align with the value Spotify is using, your track may be normalized in a way you weren’t expecting.

Imagine you’ve mixed and mastered your whole song on cheap headphones with no high frequency extension. You can’t tell what’s going on up there, so maybe you’ve cranked the highs up way too loud. The loudness algorithm is still going to register those highs and may turn your track down excessively. The same could happen if your system was lacking in bass while you were mixing.

Or imagine your speakers have a big bump in the low mids. Any song you play with a similar frequency bump will seem louder because your speakers are boosting it. Obviously the loudness algorithm doesn’t know the frequency response of your speakers or headphones, so it can’t compensate for that.

A good sanity-check for this problem is to use a tool like Tonal Balance Control 2.

Edge cases

Everything I’ve described above is going to be true most of the time. However, for the sake of completeness, there are a couple of edge cases to explain:

Album playback

If you upload an album, Spotify will measure the integrated loudness of each song individually. When a song comes up in a playlist, Spotify will normalize it based on the individual song’s loudness. When you play an album with shuffle off, Spotify will instead apply the same normalization to each song so that the loudest song is -14 integrated LUFS. This means that songs intentionally made to be quiet or loud relative to the rest of the album will not lose their intended level.
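The difference between the two modes can be sketched as follows. This is a simplified Python model of the behavior described in the paragraph above, not Spotify's code; the track titles and loudness values are hypothetical.

```python
TARGET_LUFS = -14.0

def track_gains_db(track_lufs):
    """Playlist or shuffle playback: each track gets its own gain to reach the target."""
    return {title: TARGET_LUFS - lufs for title, lufs in track_lufs.items()}

def album_gain_db(track_lufs):
    """Album playback: one shared gain that puts the loudest track at the target."""
    return TARGET_LUFS - max(track_lufs.values())

# Hypothetical album with an intentionally quiet interlude.
album = {"Opener": -9.0, "Interlude": -18.0, "Closer": -12.0}

shared = album_gain_db(album)  # -5.0 dB, set by the loudest track
for title, lufs in album.items():
    per_track = lufs + track_gains_db(album)[title]
    in_album = lufs + shared
    print(f"{title}: {per_track} LUFS in a playlist, {in_album} LUFS in the album")
```

In playlist mode every track lands at -14 LUFS, while in album mode the 9 dB gap between the opener and the interlude is preserved.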

Other codecs

Spotify uses Ogg Vorbis for its mobile and desktop apps, except for the Low quality setting, which uses HE-AACv2 (24 kbps). The web app uses a different codec: AAC.

Volume level setting

The user can choose to turn off normalization, though it is on by default for all users. There is also a setting to adjust the volume level for your environment, which will change the LUFS normalization level:

- Loud: -11 LUFS

- Normal: -14 LUFS

- Quiet: -23 LUFS

If your song is quieter than -14 LUFS

Before now, we’ve only talked about how Spotify will turn your track down if it is louder than -14 LUFS. But if your track is quieter than -14 LUFS, Spotify will actually increase the gain. However, there’s a limit to this. Spotify will not raise your track past a true peak level of -1 dBTP. For example, if a track's loudness is -20 LUFS and its true peak maximum is -5 dBTP, there is only 4 dB of room before the peak reaches -1 dBTP, so Spotify only lifts the track up to -16 LUFS.

If the user has selected the “Loud” playback option, Spotify will use a limiter to raise the song to -11 LUFS, regardless of peak level. This is the only case where Spotify will apply dynamic range compression. (See the bottom of the “How we adjust loudness” section here.)
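Putting the rules from this section together, the playback gain can be modeled roughly as below. This Python sketch is based only on the behavior described in this article: the target levels are the ones listed above, the function and constant names are our own, and the actual limiting applied in the “Loud” setting is not modeled.

```python
TARGETS_LUFS = {"Loud": -11.0, "Normal": -14.0, "Quiet": -23.0}  # values quoted in this article
PEAK_CEILING_DBTP = -1.0  # positive gain stops once the true peak reaches this level

def playback_gain_db(integrated_lufs, true_peak_dbtp, setting="Normal"):
    """Estimate the gain in dB applied to a track for a given volume level setting."""
    wanted = TARGETS_LUFS[setting] - integrated_lufs
    if wanted <= 0 or setting == "Loud":
        # Turning a track down needs no peak check. In "Loud", the article says a
        # limiter is used instead, so the full gain is applied regardless of peak level.
        return wanted
    headroom = PEAK_CEILING_DBTP - true_peak_dbtp
    return min(wanted, max(headroom, 0.0))

# The example from the text: -20 LUFS with a true peak of -5 dBTP.
gain = playback_gain_db(-20.0, -5.0)
print(gain, -20.0 + gain)  # 4.0 -16.0

# The same track with the listener's setting on "Loud".
print(playback_gain_db(-20.0, -5.0, setting="Loud"))  # 9.0
```

A quiet master with peaks close to full scale gets very little lift, so dynamic tracks can end up below -14 LUFS in the Normal and Quiet settings.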

The takeaways

To take the mystery out of what will happen to a track, it can be incredibly helpful to create your own “streaming preview” using RX 11 Advanced.

Next, you may consider not pushing your limiter as hard. If streaming is your main platform, you now know how loudness normalization will affect your track. You may also consider following Spotify’s guidelines on peak level and leaving at least 2 dB true peak headroom for the encoding process. If you’re concerned that your playback system’s uneven frequency response might be hurting your loudness measurements, you may want to use a tool like Tonal Balance Control 2.

We hope this has been useful and informative, and helps you producers and engineers get the results you expect on Spotify!