Understanding Loudness in Audio Mastering

What is loudness? The answer may seem obvious, but let's explore the factors that influence our perception of loudness and how to apply them to audio mastering.

What is loudness? In an episode of iZotope’s Are You Listening? mastering video series, professional mastering engineer and iZotope’s Director of Education, Jonathan Wyner, covered some great ground on loudness in mastering. If you haven’t yet watched it, I highly recommend you start your journey to the heart of loudness there.

Today we want to dive a bit deeper into why that thing we call “loudness” is harder to define than you might think. In this piece you’ll learn:

Why answering the question, “What is loudness?” is harder than you think

How tonal balance and spectral density contribute to loudness

How loud “loud enough” is

It’s all in your head

No, seriously. It’s really all in your head. It’s easy to overlook this and think that there’s some objective thing called “loudness,” but it’s important to acknowledge that all hearing, including our perception of loudness, happens in our mind and in our ears—though the latter is kind of secondary. Here are two quick anecdotes to help illustrate this:

Different people hear sound in different ways

My wife and I frequently disagree on how loud something is, and not in as consistent a way as you might expect. For example, sometimes she finds something to be perfectly comfortable to listen to, while I think it’s blisteringly loud. Other times, she’ll experience the TV as distractingly loud while I’m focusing intently to make out dialogue and other details.

We’re both experiencing the same sound pressure level in the air, so what’s going on here? Well, this is a perfect illustration of the fact that your sense of hearing is affected not only by your sex and age (my wife is six years my junior) but potentially also by your current state of mind and level of alertness. Have you ever listened to something first thing in the morning at the same playback level as the night before only to find it uncomfortably loud? Those are the same kinds of factors at play.

Your hearing can change day-to-day

Here’s another, very different example:

Some time ago, I went through a period during which I experienced high levels of stress, and as a result, started clenching my jaw in my sleep. This caused muscle inflammation, which ultimately constricted my Eustachian tube (and likely my cochlea, to an extent), and one morning I woke up unable to hear above about 8 kHz in my right ear. As if that weren’t distressing enough, what happened next was doubly so, and truly bizarre.

Over the course of the morning, I started to notice that pitches in my right ear were about a half-step higher than in my left, a condition I later learned is called diplacusis dysharmonica. Even a pure mono tone sounded out of tune with itself. Fun, right? How’s that stress level, Stewart? It turns out this was my brain’s way of compensating for the sudden deficiency in high-frequency information: artificially pitch-shifting incoming sounds upward to fill in the gap.

Thankfully, after identifying what was going on, I was able to take corrective action. My high-frequency hearing returned, and the left half of my brain stopped pretending it was a ring modulator. On the whole, this experience forced me to come to terms with just how much hearing happens in your brain. If your ears are the microphones that turn acoustic energy into electrical signals, your brain is the signal processor that turns those signals into something we actually experience as sound. It can, and will, reprogram itself on the fly.

What factors into loudness perception?

Hopefully I’ve convinced you by now that even something as seemingly simple as loudness can be a very personal experience depending on how your brain interprets incoming signals. But given that, what are some factors that will cause most brains to interpret a sound as louder or softer than another sound? And how do those experiences relate to what we might call “loudness” in a digital audio context?

Pitch

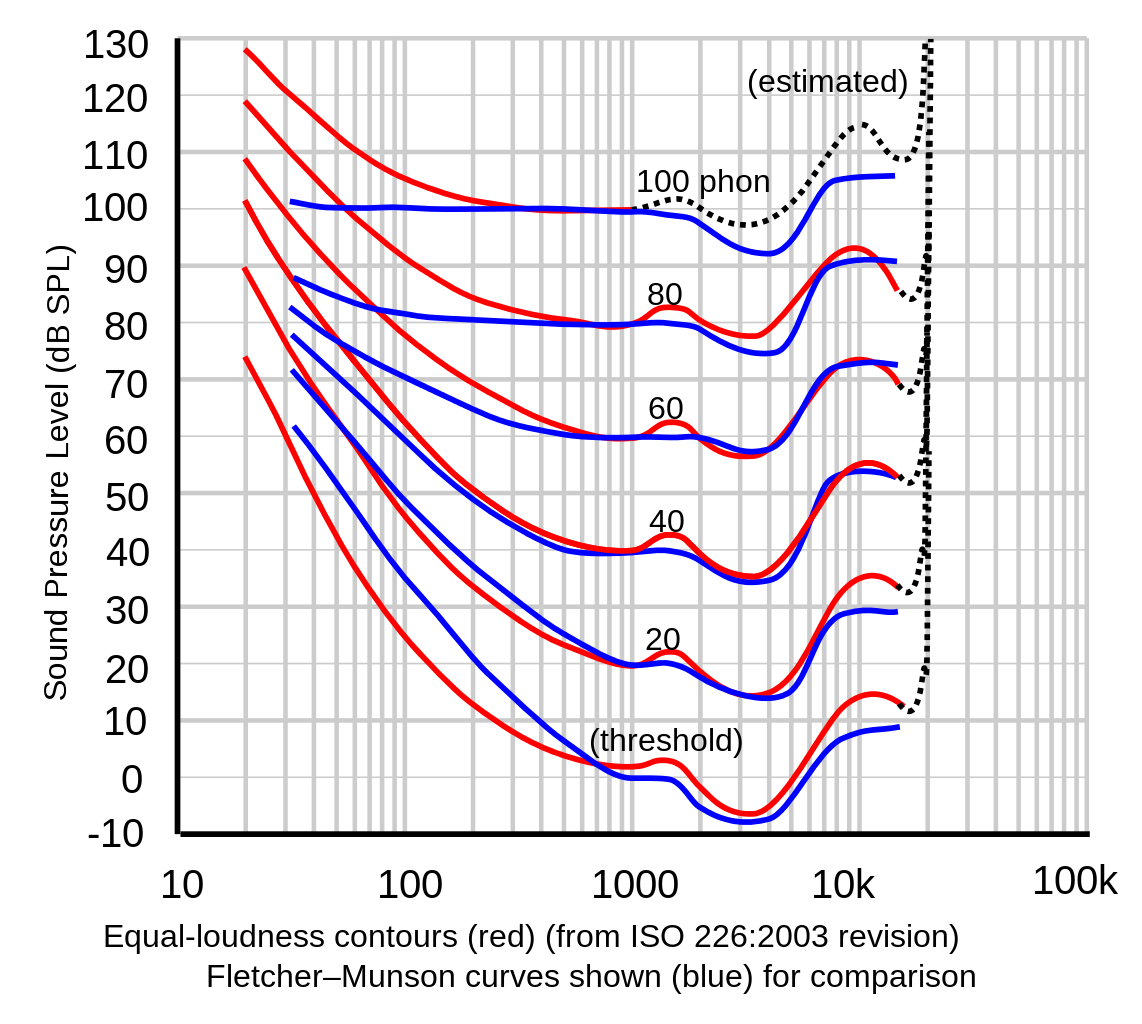

I’ve talked before about the equal loudness contours, but they’re certainly worth a mention in this context. In a nutshell, they point at the fact that our ear-brain system does not perceive all pitches as equally loud, given equal sound pressure levels. The relationship is complicated, personal, and non-linear—meaning the discrepancies change with both pitch and total overall SPL. But generally speaking, we perceive very low and very high pitches as softer than pitches in the midrange—especially those around 3–4 kHz—when played at an equal SPL.

Comparison of Fletcher-Munson and ISO 226:2003 equal loudness contours

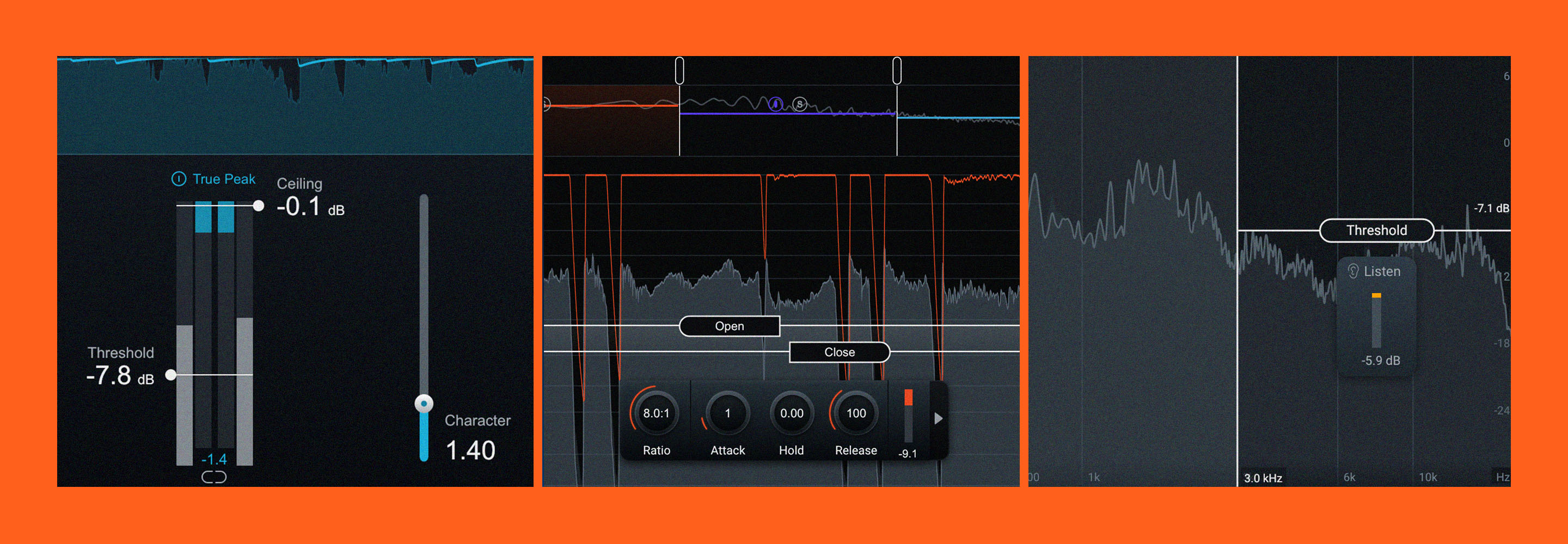

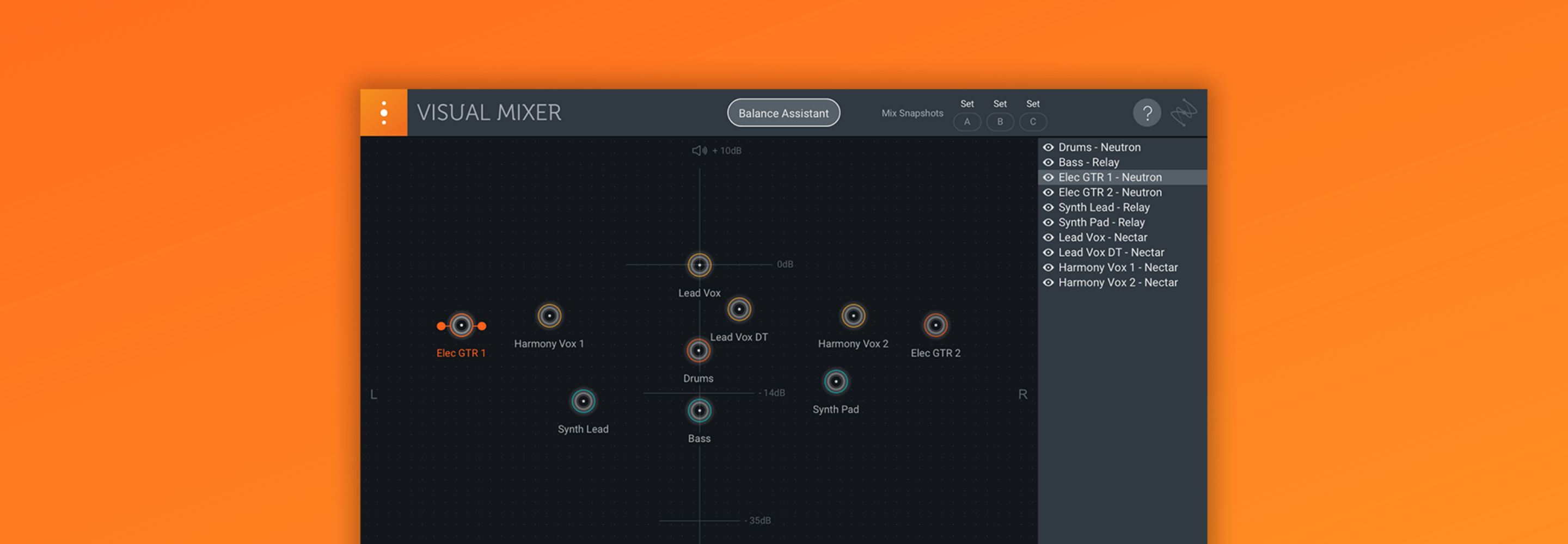

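From a practical audio mastering standpoint, this means that tonal balance plays a crucial role in whether a song is perceived as loud, especially when it is compared against another song on a meter. LUFS meters like the ones in Insight 2 account for this by applying a frequency weighting (the K-weighting filter defined in ITU-R BS.1770) before measuring level, so two signals with the same RMS level can read differently depending on where their energy sits in the spectrum.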
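To see how a LUFS-style meter folds tonal balance into its reading, here is a minimal Python sketch of the K-weighting filter from ITU-R BS.1770, using the standard’s published biquad coefficients for a 48 kHz sample rate. It is deliberately simplified: one channel, 48 kHz only, and no gating, so it is not a compliant integrated-loudness meter, and it makes no claim about how Insight 2 is implemented internally. The helper names (`biquad`, `ungated_loudness`, `sine`) are our own.

```python
import math

# ITU-R BS.1770 K-weighting coefficients, valid ONLY at a 48 kHz sample rate.
# Stage 1: high-shelf "pre-filter" (accounts for the acoustic effect of the head).
SHELF_B = (1.53512485958697, -2.69169618940638, 1.19839281085285)
SHELF_A = (-1.69065929318241, 0.73248077421585)  # a1, a2 (a0 = 1)
# Stage 2: "RLB" high-pass that rolls off very low frequencies.
HPF_B = (1.0, -2.0, 1.0)
HPF_A = (-1.99004745483398, 0.99007225036621)

FS = 48_000


def biquad(x, b, a):
    """Direct-form I second-order IIR filter."""
    b0, b1, b2 = b
    a1, a2 = a
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for s in x:
        y = b0 * s + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, s
        y2, y1 = y1, y
        out.append(y)
    return out


def ungated_loudness(x):
    """Mono, ungated K-weighted loudness in LUFS (simplified sketch, no gating)."""
    z = biquad(biquad(x, SHELF_B, SHELF_A), HPF_B, HPF_A)
    mean_square = sum(v * v for v in z) / len(z)
    return -0.691 + 10 * math.log10(mean_square)


def sine(freq, seconds=1.0, amp=1.0):
    """Sine wave at `freq` Hz, sampled at FS."""
    return [amp * math.sin(2 * math.pi * freq * n / FS) for n in range(int(FS * seconds))]


# Same peak and RMS level, different pitch: the meter reads them differently.
for f in (40, 997, 8000):
    print(f"{f:>5} Hz sine at 0 dBFS peak: {ungated_loudness(sine(f)):6.2f} LUFS")
```

The three sines have identical peak and RMS levels, yet the 40 Hz tone is pulled down by the high-pass stage and the 8 kHz tone is lifted by the shelf. The reading near 1 kHz should land close to the -3.01 LKFS that BS.1770 specifies for a full-scale sine at that frequency.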

Spectral density—and contrast

This one’s a little counter-intuitive. It might seem like it should be easier to make a big, dense arrangement with lots of instruments covering the entire frequency range sound louder than a solo instrument or voice, right? After all, an entire symphony orchestra undeniably sounds louder than just one flute. Well, not quite, at least not in the world of digital audio, where you have a strictly defined maximum level.

Spectral density can be thought of as a continuum between a single, pure tone and broadband noise (let’s say pink noise for our purposes). Pink noise has maximum spectral density: its energy is spread across the entire audible spectrum, with equal power in every octave. A pure tone, on the other hand, has minimum spectral density: it’s just a single frequency.
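“Spectral density” is used informally here. One common way to put a number on the tone-to-noise continuum, which is our choice rather than the author’s, is spectral flatness (sometimes called Wiener entropy): the geometric mean of the power spectrum divided by its arithmetic mean. It is near 0 when all the energy sits in a single frequency bin and equals 1 for a perfectly flat spectrum; a single snapshot of noise scores well below 1 because individual bins fluctuate randomly. The minimal Python sketch below uses a naive DFT and white Gaussian noise, since on linear-frequency bins white noise, not pink, is the flat case. The function names and the 512-sample window are hypothetical choices.

```python
import math
import random

N = 512  # analysis length in samples (hypothetical choice)
FS = 48_000


def power_spectrum(x):
    """Naive DFT power for bins 1..N/2-1 (skips DC and Nyquist)."""
    n = len(x)
    powers = []
    for k in range(1, n // 2):
        re = im = 0.0
        for t, v in enumerate(x):
            angle = 2 * math.pi * k * t / n
            re += v * math.cos(angle)
            im -= v * math.sin(angle)
        powers.append(re * re + im * im)
    return powers


def spectral_flatness(x, eps=1e-20):
    """Geometric mean / arithmetic mean of the power spectrum."""
    p = [v + eps for v in power_spectrum(x)]  # eps avoids log(0) in empty bins
    geometric = math.exp(sum(math.log(v) for v in p) / len(p))
    arithmetic = sum(p) / len(p)
    return geometric / arithmetic


rng = random.Random(3)  # fixed seed so the run is repeatable
# 3 kHz is exactly bin 32 of a 512-point DFT at 48 kHz, so the tone lands on one bin.
tone = [math.sin(2 * math.pi * 3000 * t / FS) for t in range(N)]
noise = [rng.gauss(0.0, 1.0) for _ in range(N)]

print(f"tone flatness:  {spectral_flatness(tone):.3g}")
print(f"noise flatness: {spectral_flatness(noise):.3g}")
```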

If we compare the peak and average levels of broadband noise and a 1 kHz tone, we’ll notice two rather interesting things:

1. If we match the average levels of the two signals, we’ll find that the noise has peak levels 10–11 dB higher than the tone. It’s difficult to make a perceptual comparison between such different signals, but you could say that they sound roughly the same loudness in the air when matched this way.

Tone and noise set to -20 dBFS RMS

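2. If we play both signals together and reduce the level of the tone until we can just barely hear it over the noise, we find that its peak level is a full 36 dB below the peak level of the noise signal: the 26 dB reduction, on top of the roughly 10 dB peak difference we started with. Interestingly, though, when viewed in a spectrum analyzer, the tone still sits roughly 6 dB above the level of the noise at 1 kHz (the exact figure depends on the analyzer’s frequency resolution, since a narrower analysis band captures less of the noise’s energy).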

Tone dropped 26 dB and blended with noise
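The level matching in the two steps above can be reproduced with a short Python sketch. It assumes RMS is measured relative to a full-scale value of 1.0 (some meters add 3 dB so that a full-scale sine reads 0 dBFS RMS), and it uses seeded white Gaussian noise as a stand-in for pink noise, since both are roughly Gaussian in amplitude. Exact peak figures depend on the noise source and the signal length, so expect a gap near, not necessarily equal to, the 10 to 11 dB quoted above. The helper names are hypothetical.

```python
import math
import random

FS = 48_000


def rms_db(x):
    """RMS level in dB relative to full scale (1.0); no +3 dB sine offset."""
    return 10 * math.log10(sum(v * v for v in x) / len(x))


def peak_db(x):
    """Sample-peak level in dB relative to full scale."""
    return 20 * math.log10(max(abs(v) for v in x))


def set_rms(x, target_db):
    """Scale a signal so its RMS level equals target_db."""
    gain = 10 ** ((target_db - rms_db(x)) / 20)
    return [v * gain for v in x]


rng = random.Random(1)  # fixed seed so the run is repeatable
tone = set_rms([math.sin(2 * math.pi * 1000 * n / FS) for n in range(FS)], -20.0)
# White Gaussian noise as a stand-in for pink noise (both roughly Gaussian in amplitude).
noise = set_rms([rng.gauss(0.0, 1.0) for _ in range(FS)], -20.0)

# Step 1: equal RMS, compare peaks.
gap_matched = peak_db(noise) - peak_db(tone)
# Step 2: drop the tone by 26 dB; the peak gap grows by exactly that amount.
quiet_tone = [v * 10 ** (-26 / 20) for v in tone]
gap_quiet = peak_db(noise) - peak_db(quiet_tone)

print(f"tone peak {peak_db(tone):.2f} dBFS, noise peak {peak_db(noise):.2f} dBFS")
print(f"peak gap at matched RMS: {gap_matched:.1f} dB; after -26 dB: {gap_quiet:.1f} dB")
```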

Taken together, these facts suggest that our ear-brain system relies much more on average level than on peak level for loudness perception, and that it can pick out a tone at a much lower absolute level than a comparable noise signal. Additionally, as spectral density increases, so does the peak level relative to the average level. This means that denser material needs more peak headroom to reach the same average level; if that headroom isn’t available, the average level, and with it the perceived loudness, must necessarily come down.
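To see why denser material needs more headroom, the sketch below builds hypothetical test signals from 1 to 64 equal-amplitude sine partials with random phases, log-spaced between 100 Hz and 10 kHz, and reports each signal’s crest factor (peak-to-RMS ratio). Assuming no limiting or other dynamics processing, a 0 dBFS peak ceiling caps the RMS level at minus the crest factor, so a higher crest factor means a lower achievable average level. Real program material is far messier than this, and limiting changes the picture, so treat the numbers as illustrative only. The function names are our own.

```python
import math
import random

FS = 48_000
N = FS // 4  # 0.25 s of audio keeps the pure-Python loops quick


def crest_factor_db(x):
    """Peak-to-RMS ratio in dB."""
    peak = max(abs(v) for v in x)
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return 20 * math.log10(peak / rms)


def partials(count, rng):
    """Hypothetical test signal: `count` equal-amplitude sines with random phases,
    log-spaced from 100 Hz to 10 kHz (a single partial sits at 1 kHz)."""
    if count == 1:
        freqs = [1000.0]
    else:
        freqs = [100.0 * 100.0 ** (k / (count - 1)) for k in range(count)]
    phases = [rng.uniform(0.0, 2 * math.pi) for _ in freqs]
    return [
        sum(math.sin(2 * math.pi * f * n / FS + p) for f, p in zip(freqs, phases))
        for n in range(N)
    ]


rng = random.Random(7)  # fixed seed so the run is repeatable
for count in (1, 2, 4, 8, 16, 32, 64):
    cf = crest_factor_db(partials(count, rng))
    # With no limiting, a 0 dBFS peak ceiling caps the RMS level at -crest factor.
    print(f"{count:>3} partials: crest {cf:5.1f} dB, max RMS {-cf:6.1f} dBFS")
```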

In between the extremes of a pure tone and broadband noise, other interesting and complex psychoacoustic phenomena pop up. Of note is something known as “partial masking.” While the details of this phenomenon are the subject of ongoing research, the fundamental concept is that, within a band-limited region, different types of sounds have a greater or lesser ability to partially obscure other background sounds.

In part, this is dependent on the harmonic content of the sound, and the phase relationship of the harmonics to the fundamental. At the end of the day though, this can help explain why, in some cases, certain combinations of sounds can increase the overall “objective”—or measured—level, without any increase in the subjective—or perceived—level.

In the experience of loudness, it’s also worth thinking about contrast and timbre. For example, it is generally easier to judge a song, or section of a song, as “loud” if it is surrounded by softer material. Many cues about loudness are also embedded in the timbre of a sound. To paraphrase Jonathan Wyner, “Whether your mother is yelling at you to ‘get up or you’ll be late to school’ from your bedroom or the kitchen, you can still tell she’s yelling—despite the difference in SPL.”

Conclusion

Hopefully you now understand that there really is no one thing we can point to and call “loudness.” How we all experience loudness is both very personal and highly dependent on a large number of factors, and we didn’t even talk about consumer playback systems or volume controls! If you’re wondering what the point of all this is and how it really applies to audio mastering, I can distill it quite simply.

As creators, and as mixing and mastering engineers, we really have very little control over how casual listeners will perceive the loudness of the music we work on. What we can control, at least to a greater degree, is how they perceive the overall quality: the punch, tonal balance, density, clarity, and spaciousness. To that end, the pursuit of additional level in hopes of increasing perceived playback loudness can be a fool’s errand.

If, during the course of mastering, you find yourself asking “Is my record loud enough?” or, “How loud should I be making my record for my distribution medium?”, our own Jonathan Wyner thinks you may be asking the wrong question. He suggests instead that you ask yourself, “Can I make this louder and have it sound at least as good as it does now, if not better?” For every song, there’s a point where you reach the optimal balance of level and “goodness.” It’s your job to find it.

Good luck, and happy mastering!