What is Timbre in Music? Why is it Important?

Timbre in music helps us distinguish one instrument from the next. But how? We ran pure sound waves, instruments, and vocals through the spectrogram in Insight 2 to discover what makes them unique.

Whether we’re at a concert or listening over headphones, we rely on a series of musical factors to identify the instruments we’re hearing and whether they are electronic, acoustic, or human. Some of these are pretty straightforward, like pitch and loudness, while others are not as easily pinned down by an equation or a note name. I’m talking about timbre.

Timbre refers to the character, texture, and color that define a sound. It’s a catchall category for the features of sound that are not pitch, loudness, duration, or spatial location, and it helps us judge whether what we’re listening to is a piano, flute, or organ.

Since words can only do so much to describe timbre, in this article we will rely on visual aids from Insight 2’s spectrogram, a tool that shows us what sound looks like, to get a better understanding of timbre, how it works, and how it can be used to improve the music we make.

What is timbre in music? Visualize it

Our perception of timbre relies on two key physical characteristics of sound: frequency spectrum and envelope. The spectrogram in Insight 2 visualizes these characteristics over time, which makes it easy to compare and contrast common timbres in music and learn what makes them different from one another.

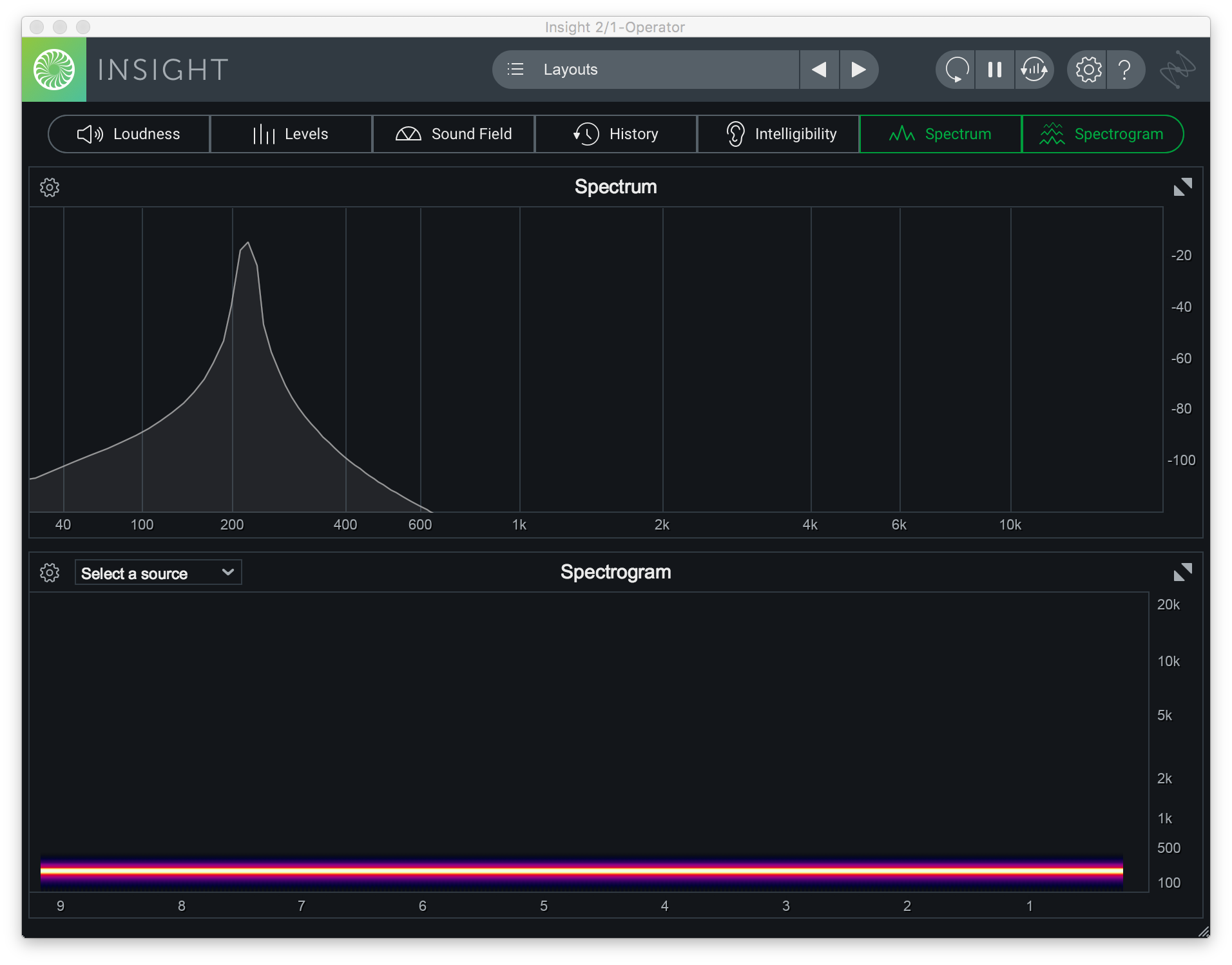

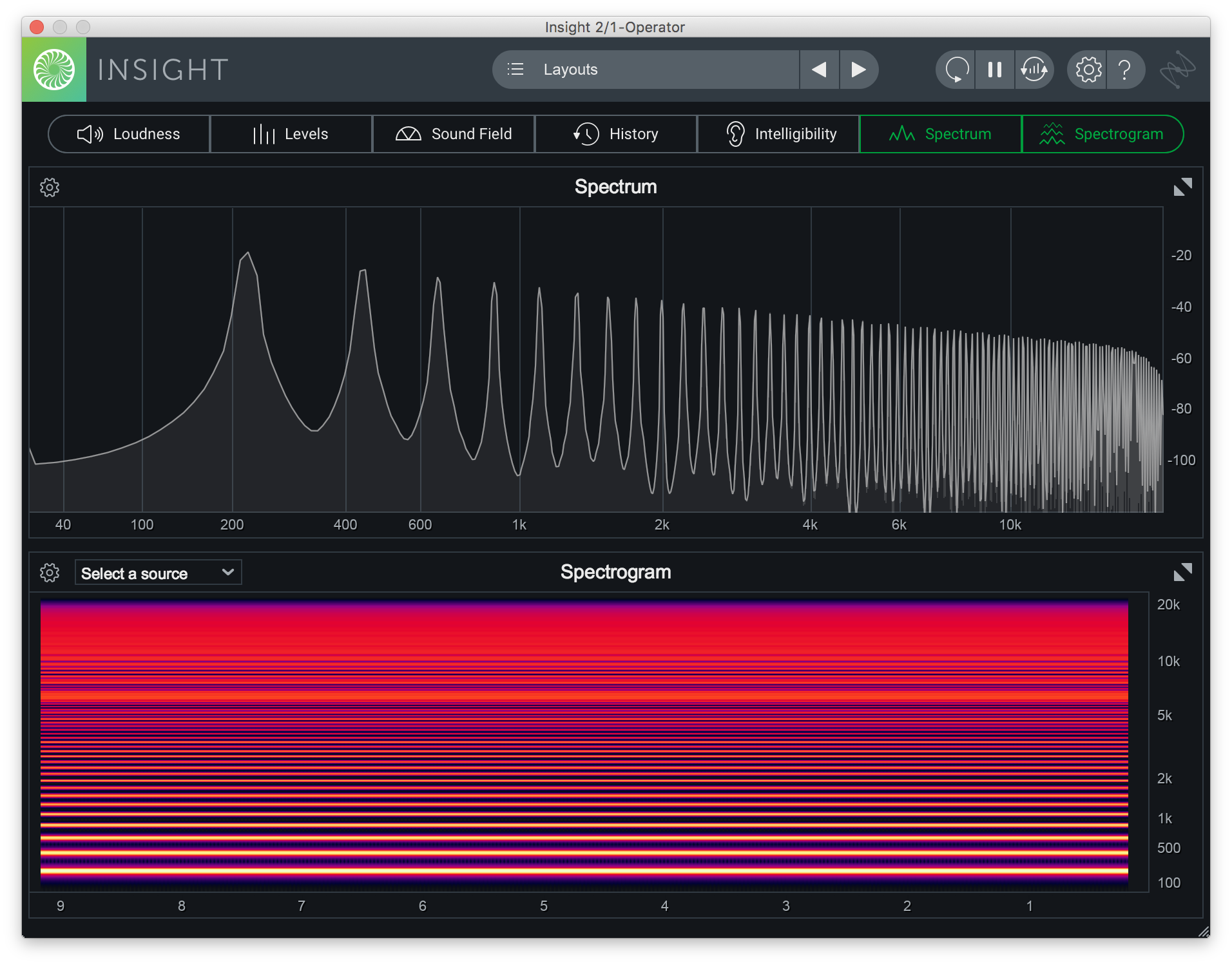
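For the curious, here is a minimal sketch of what a spectrogram computes under the hood: it slices a signal into overlapping frames, tapers each frame with a Hann window, and takes the magnitude of each frame’s discrete Fourier transform (a short-time Fourier transform). This is a generic textbook version, not Insight 2’s actual implementation. The function names, the 256-sample frame, and the 128-sample hop are assumptions chosen for illustration, and the naive DFT is slow but needs no dependencies.

```python
import cmath
import math

def hann(size):
    """Periodic Hann window: tapers frame edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / size) for i in range(size)]

def dft_magnitudes(frame):
    """Magnitudes of DFT bins 0..N/2 of a real frame (naive O(N^2), no dependencies)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]

def spectrogram(signal, frame_size=256, hop=128):
    """Short-time Fourier transform magnitudes: one row per frame (time),
    one column per frequency bin of width sample_rate / frame_size."""
    window = hann(frame_size)
    rows = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        rows.append(dft_magnitudes(frame))
    return rows

# A 220 Hz sine wave sampled at 8 kHz.
sample_rate = 8000
sine = [math.sin(2 * math.pi * 220 * i / sample_rate) for i in range(1024)]
rows = spectrogram(sine)
```

With 256-sample frames at 8 kHz, each bin is 31.25 Hz wide, so the strongest bin in every frame is bin 7 (centered at 218.75 Hz). Plotted over time, that is a single horizontal line, which is exactly what a pure sine wave looks like on a spectrogram.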

The spectrogram below visualizes a single sine wave oscillator playing the note A3 (labeled A2 in some DAWs), which vibrates at 220 Hz. A sine wave is the simplest waveform. It’s a basic, isolated sound with only a fundamental frequency: the lowest frequency of a sound, which determines its pitch. As a result, its timbre is dull or pale.
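Note names map to frequencies through equal temperament: in scientific pitch notation A4 is 440 Hz, and each semitone multiplies the frequency by the twelfth root of two. That puts 220 Hz at A3 and A2 at 110 Hz. Some DAWs number octaves one lower (labeling middle C as C3), which is why 220 Hz can also appear as "A2". The helper below is a hypothetical sketch that only handles natural notes (no sharps or flats).

```python
# Semitone offsets of the natural notes from A within the same octave number.
NOTE_OFFSETS = {"C": -9, "D": -7, "E": -5, "F": -4, "G": -2, "A": 0, "B": 2}

def note_to_hz(name, octave, a4=440.0):
    """Equal-tempered frequency of a natural note in scientific pitch notation."""
    semitones_from_a4 = NOTE_OFFSETS[name] + 12 * (octave - 4)
    return a4 * 2 ** (semitones_from_a4 / 12)

print(note_to_hz("A", 3))  # 220.0
print(note_to_hz("A", 2))  # 110.0
```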

Side note—although sine waves don’t have any interesting timbre on their own, combining two or more together can create a more complex sound, which is the basis for additive synthesis.
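Additive synthesis is simple to sketch: each partial is a sine wave with its own frequency and amplitude, and the output is the sum of all of them. The `additive` function and its `(frequency, amplitude)` input format are illustrative assumptions, not any particular synthesizer’s API.

```python
import math

def additive(partials, duration_s, sample_rate=44100):
    """Sum sine partials, given as (frequency_hz, amplitude) pairs, into a list of samples."""
    n = round(duration_s * sample_rate)
    return [
        sum(amp * math.sin(2 * math.pi * freq * i / sample_rate) for freq, amp in partials)
        for i in range(n)
    ]

# A 220 Hz fundamental plus a quieter partial one octave up (440 Hz).
tone = additive([(220, 1.0), (440, 0.5)], duration_s=0.01)
```

Adding more partials at whole-number multiples of 220 Hz, with suitable amplitudes, moves the result toward the square and sawtooth timbres discussed next.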

Sine wave visualization in Insight 2

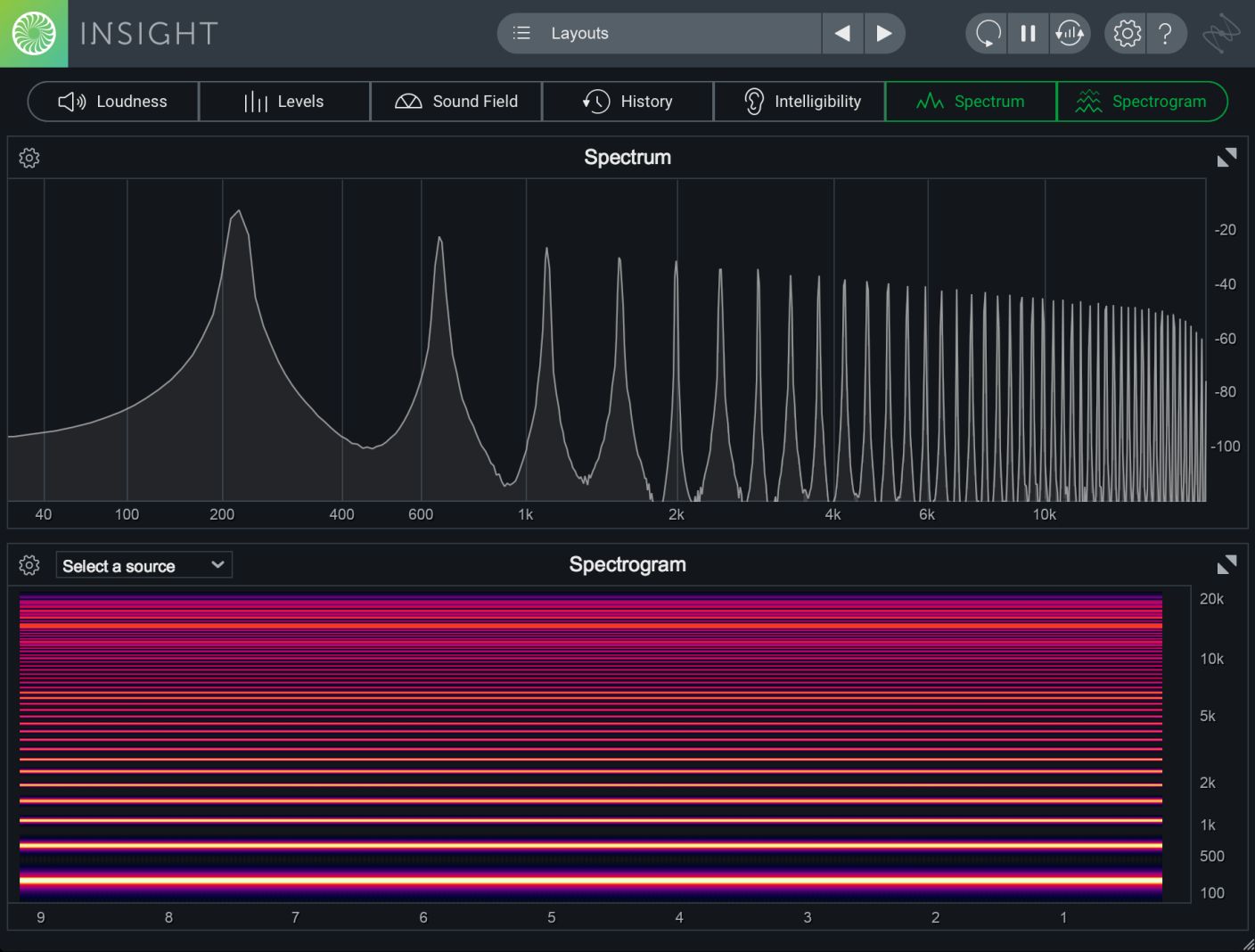

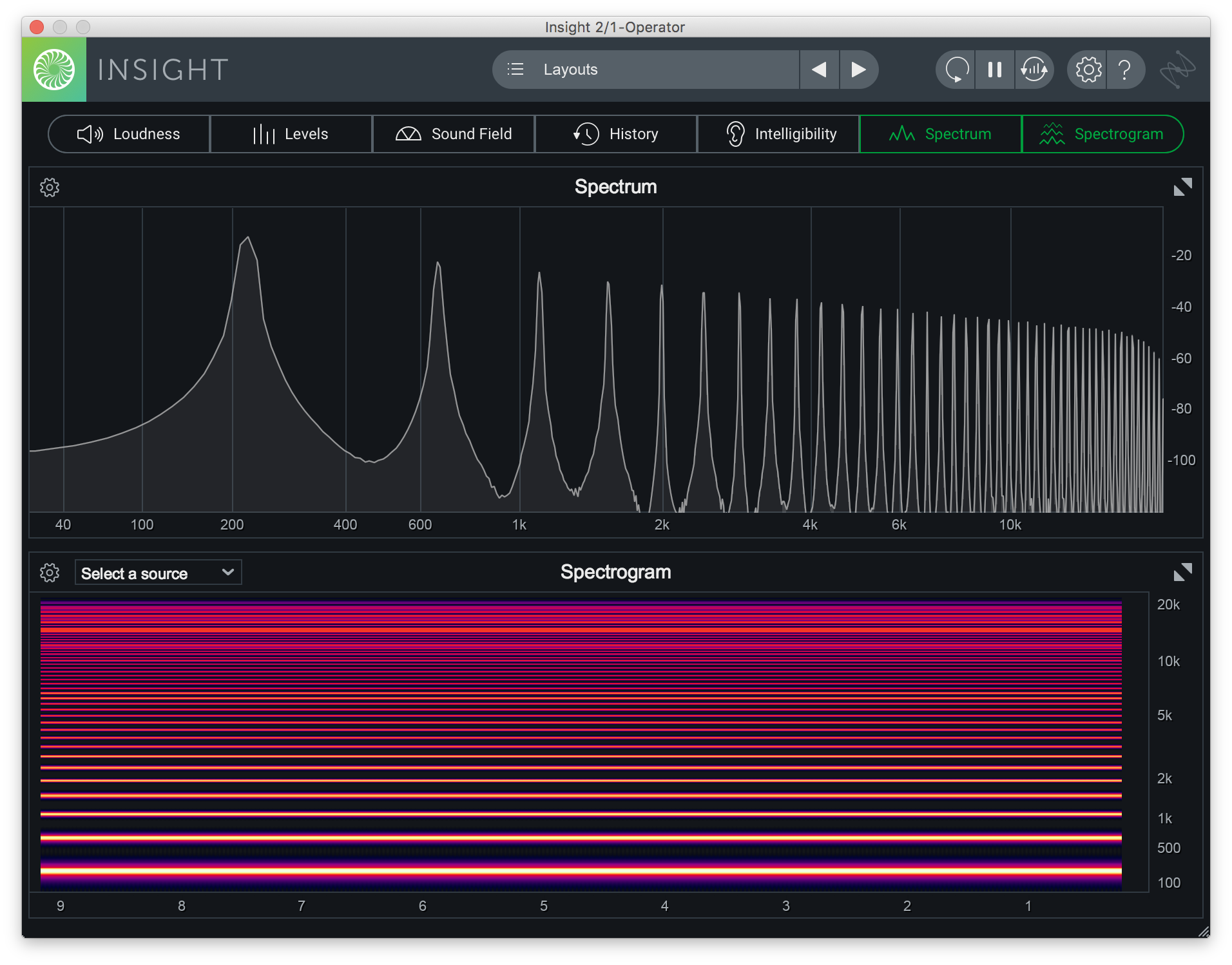

The same note played on a square wave oscillator produces a much busier spectrogram. The fundamental still vibrates at 220 Hz, but now it’s joined by a series of lines above it, which represent harmonics. Harmonics are frequencies at whole-number multiples of the fundamental, and their structure in a signal largely determines how we hear timbre. A square wave contains only odd-numbered harmonics, which lie at odd multiples of the fundamental frequency: for example, 660 Hz (220 x 3), 1100 Hz (220 x 5), 1540 Hz (220 x 7), and so on.
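The harmonic series of an ideal square wave follows from its Fourier series, (4/π) Σ sin(2πnft)/n summed over odd n: only odd multiples of the fundamental appear, and the nth harmonic has amplitude 4/(πn). The sketch below lists those partials; the function name is hypothetical.

```python
import math

def square_harmonics(fundamental_hz, count):
    """First `count` partials of an ideal square wave (peak amplitude 1):
    odd multiples n of the fundamental, each with amplitude 4 / (pi * n)."""
    partials = []
    for k in range(count):
        n = 2 * k + 1  # 1, 3, 5, 7, ...
        partials.append((n * fundamental_hz, 4 / (math.pi * n)))
    return partials

for freq, amp in square_harmonics(220, 4):
    print(freq, round(amp, 3))
```

For a 220 Hz fundamental this yields 220, 660, 1100, and 1540 Hz, with each amplitude shrinking in proportion to 1/n.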

Signal amplitude is represented by changes in color. The loudest parts of a signal have the brightest lines, and the quieter parts are indicated by darker lines. We can see that the loudest frequency is the fundamental and that the harmonics decrease in loudness as they increase in frequency. Because a square wave has no even harmonics, its partials are spaced twice as far apart as in a full harmonic series, and this sparse spacing contributes to a hollow, cold timbre similar to that of bells and chimes.

Square wave visualization in Insight 2

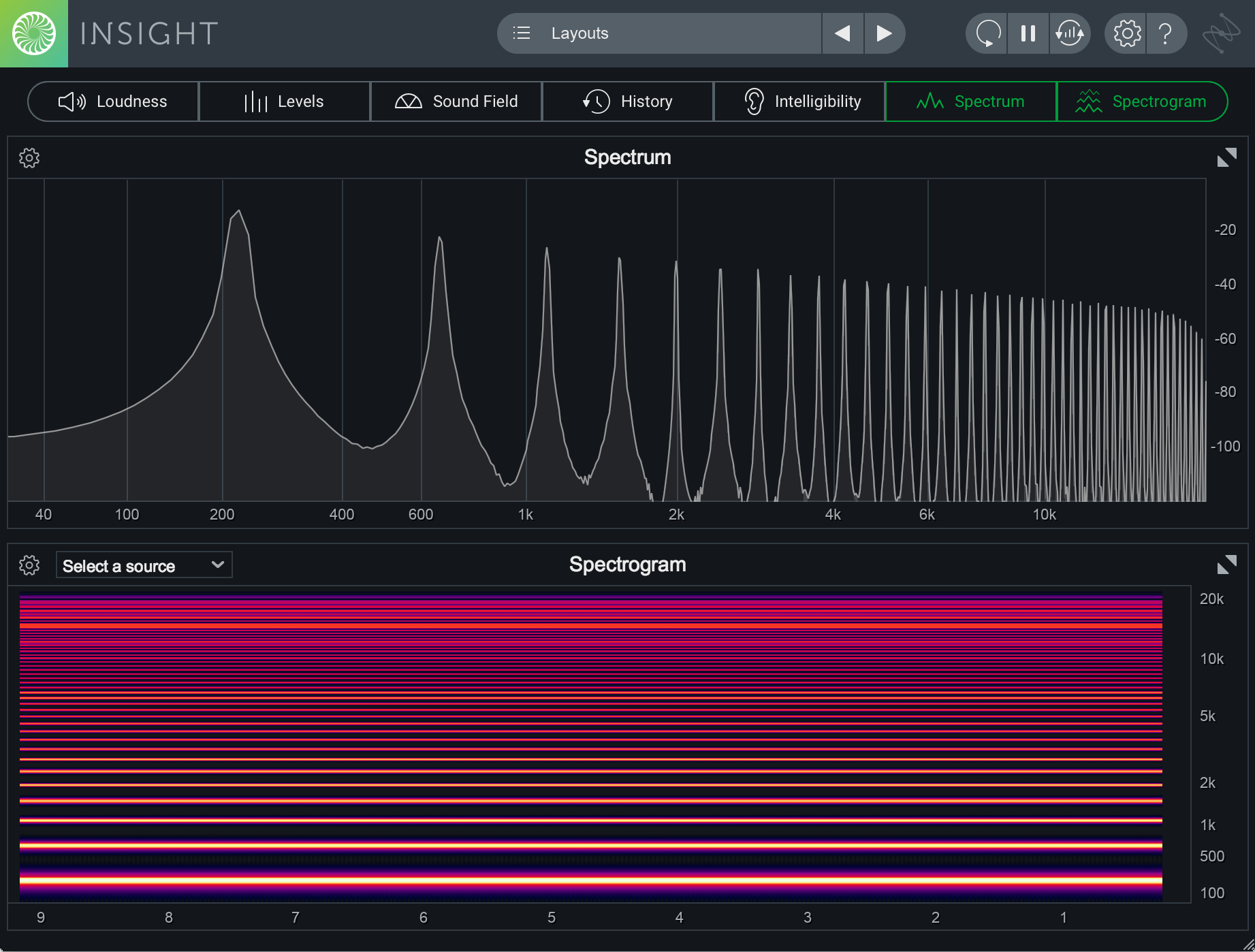
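Spectrograms generally map level in decibels to brightness. Assuming harmonic amplitudes fall off as 1/n, as they do in an ideal square wave, each harmonic’s level relative to the fundamental is 20·log10(1/n) dB, which is why the lines grow steadily darker toward the top. The function name is hypothetical.

```python
import math

def relative_level_db(harmonic_number):
    """Level of the nth harmonic relative to the fundamental, in dB,
    assuming amplitudes fall off as 1/n (ideal square and sawtooth waves)."""
    return 20 * math.log10(1 / harmonic_number)

for n in (1, 3, 5, 7):
    print(n, round(relative_level_db(n), 1))
```

The 3rd, 5th, and 7th harmonics come out at roughly -9.5, -14.0, and -16.9 dB below the fundamental.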

A square-like timbre can be recreated by running a sine wave through clipping distortion (see the spectrogram below). When distortion is introduced into a signal, it generates new harmonics that add color. Low distortion amounts are ideal for a warm or woolly timbre, while extreme amounts produce a noisy one.

Sine wave with distortion
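The clipping idea above can be sketched numerically: hard-clip a sine wave, then measure individual harmonics with a single DFT bin. Symmetric clipping keeps the positive and negative halves of the wave mirror images, so it adds odd harmonics (as in a square wave) and no even ones. The 0.3 threshold and the helper names are illustrative assumptions; real distortion plugins usually use smoother clipping curves.

```python
import cmath
import math

def hard_clip(samples, threshold):
    """Symmetric hard clipping: flatten anything beyond +/- threshold."""
    return [max(-threshold, min(threshold, s)) for s in samples]

def harmonic_amplitude(samples, cycles, harmonic):
    """Amplitude of one harmonic for a signal holding exactly `cycles`
    periods of its fundamental (a single DFT bin, so no window is needed)."""
    n = len(samples)
    k = cycles * harmonic
    acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2 * abs(acc) / n

n, cycles = 1000, 10  # 10 full periods of 100 samples each
sine = [math.sin(2 * math.pi * cycles * i / n) for i in range(n)]
clipped = hard_clip(sine, 0.3)

for h in (1, 2, 3, 5):
    print(h, round(harmonic_amplitude(sine, cycles, h), 3),
          round(harmonic_amplitude(clipped, cycles, h), 3))
```

The unclipped sine shows energy only at harmonic 1, while the clipped version gains a clear 3rd and 5th harmonic and still no 2nd.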

The harmonic content of a sawtooth wave is even richer than that of a square wave: it includes a mix of odd and even harmonics, so its upper spectrum is twice as densely filled. Even harmonics lie at even multiples of the fundamental, and every doubling of frequency is an octave: 440 Hz (220 x 2) is one octave above 220 Hz, 880 Hz (220 x 4) is two octaves above, and so on. The timbre of a sawtooth wave is bright and buzzy, and it becomes harsh when unfiltered. Because they contain every harmonic in the series, sawtooth waves are ideal starting points for subtractive synthesis.

Sawtooth wave visualization in Insight 2
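The same kind of listing for an ideal sawtooth (Fourier series (2/π) Σ (-1)^(n+1) sin(2πnft)/n) contains every integer multiple of the fundamental, with amplitude 2/(πn). Because octaves correspond to doublings of frequency, a harmonic’s distance from the fundamental in octaves is log2(n). Harmonics 2, 4, and 8 (440, 880, and 1760 Hz for a 220 Hz fundamental) are exact octaves, while the 3rd harmonic (660 Hz) lands an octave plus a fifth above the fundamental. Function names are hypothetical.

```python
import math

def sawtooth_harmonics(fundamental_hz, count):
    """First `count` partials of an ideal sawtooth (peak amplitude 1):
    every integer multiple n of the fundamental, with amplitude 2 / (pi * n)."""
    return [(n * fundamental_hz, 2 / (math.pi * n)) for n in range(1, count + 1)]

def octaves_above_fundamental(harmonic_number):
    """Interval from the fundamental to the nth harmonic, in octaves."""
    return math.log2(harmonic_number)

for n, (freq, amp) in enumerate(sawtooth_harmonics(220, 8), start=1):
    print(n, freq, round(amp, 3), round(octaves_above_fundamental(n), 2))
```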

A common move in subtractive synthesis is to sweep a lowpass filter over a harmonically rich signal, such as a string pad: closing the filter shifts the timbre from brilliant to mellow, and opening it shifts it back. On the spectrogram, it looks a lot like it sounds.

Lowpass filter
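A filter sweep can be sketched with the simplest possible lowpass, a one-pole filter, applied to a naive sawtooth. Lowering the cutoff (closing the filter) attenuates the upper harmonics far more than the fundamental, which is the brilliant-to-mellow change described above. This one-pole design rolls off at only about 6 dB per octave, much gentler than the 12 or 24 dB per octave filters typical of synthesizers, and the 300 Hz cutoff and other values are arbitrary choices for illustration.

```python
import cmath
import math

def sawtooth(freq, sample_rate, n):
    """Naive (not band-limited) sawtooth ramping from -1 up to 1 each period."""
    return [2 * ((freq * i / sample_rate) % 1.0) - 1 for i in range(n)]

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """One-pole lowpass: y[i] = y[i-1] + a * (x[i] - y[i-1])."""
    a = 1 - math.exp(-2 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

def harmonic_amplitude(samples, cycles, harmonic):
    """Amplitude of one harmonic via a single DFT bin (signal holds whole cycles)."""
    n = len(samples)
    k = cycles * harmonic
    acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2 * abs(acc) / n

sample_rate, freq, n = 8000, 100, 8000  # one second: exactly 100 cycles
cycles = freq * n // sample_rate
saw = sawtooth(freq, sample_rate, n)
mellow = one_pole_lowpass(saw, cutoff_hz=300, sample_rate=sample_rate)
```

Comparing `harmonic_amplitude(..., 10)` against `harmonic_amplitude(..., 1)` before and after filtering shows the 10th harmonic (1 kHz) losing roughly two thirds of its level relative to the fundamental.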

Timbre over time

So far, we have looked at how the fundamental and harmonic frequencies of a sound influence timbre, mostly with simple, static waves. As I mentioned earlier, timbre is also greatly affected by the envelope of a sound. An envelope shapes the loudness and spectral content of a sound over time, and synthesizers usually control it with four parameters: attack, decay, sustain, and release, abbreviated as ADSR.

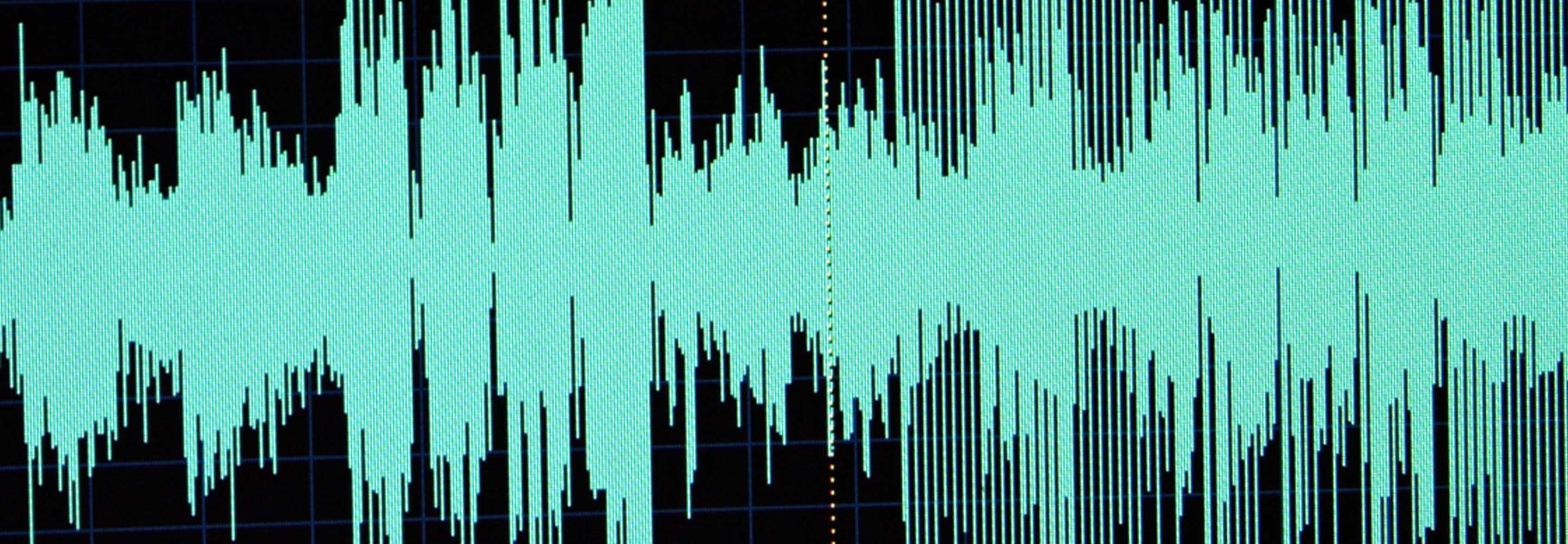

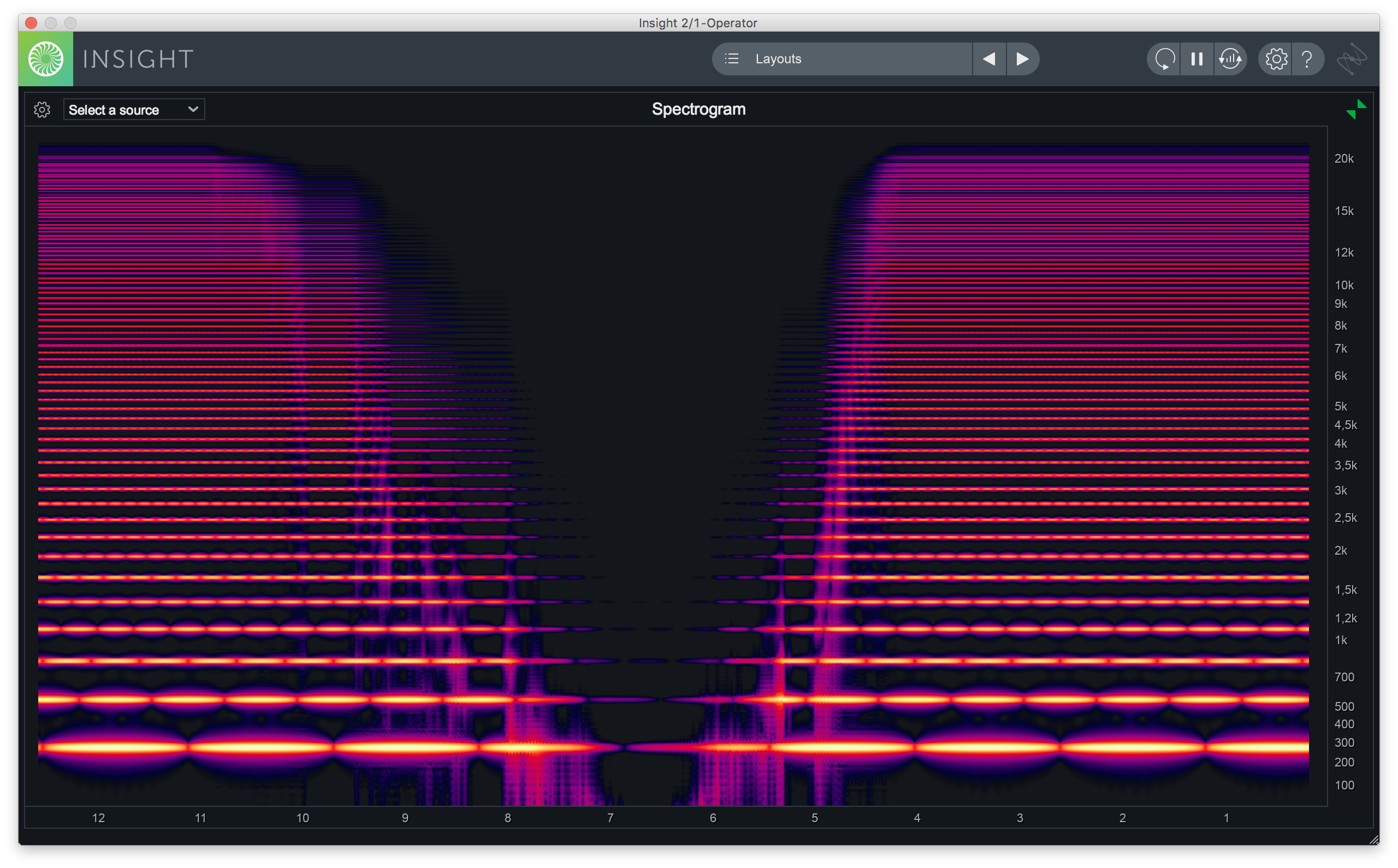
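A basic ADSR envelope can be written as a function of time. This sketch assumes straight-line segments and a release that starts from whatever level the envelope had reached when the key was let go; many synthesizers use curved (often exponential) segments instead, and `adsr_gain` is a hypothetical name.

```python
def adsr_gain(t, note_off, attack, decay, sustain, release):
    """Linear ADSR envelope gain (0..1) at time t in seconds.

    attack, decay and release are durations in seconds, sustain is a level
    from 0 to 1, and note_off is the time at which the key is released.
    """
    def held_level(time):
        # Attack ramps 0 -> 1, decay ramps 1 -> sustain, then sustain holds.
        if time < attack:
            return time / attack
        if time < attack + decay:
            return 1.0 - (1.0 - sustain) * (time - attack) / decay
        return sustain

    if t < 0:
        return 0.0
    if t < note_off:
        return held_level(t)
    # Release ramps from the level reached at note-off down to 0.
    if t >= note_off + release:
        return 0.0
    return held_level(note_off) * (1.0 - (t - note_off) / release)

# Example settings, sampled every 50 ms over 1.25 seconds.
settings = dict(note_off=1.0, attack=0.01, decay=0.1, sustain=0.5, release=0.2)
curve = [round(adsr_gain(step * 0.05, **settings), 3) for step in range(26)]
```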

To get a better understanding of how the envelope relates to timbre, take a piano recording and reverse it. When the piano plays forward in time, we hear and see that each note goes from silence to its maximum amplitude immediately after being struck. The initial decay is fast, after which the note fades gradually while the key is held and dies away once it is released: a recognizable piano envelope.

Piano playing forwards in time

Playing the piano recording backwards reverses the envelope. Each note rises gradually towards its maximum amplitude, then drops abruptly to silence. The frequency distribution and intensity of each note are the same in both directions, but the backward timbre is completely different. The resonant frequencies in the recording are emphasized, and the reversed hammer strikes introduce a strange, flickering texture.

Piano playing backwards in time
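The point that reversal leaves the frequency content alone while changing the envelope can be checked numerically. Reversing a real signal changes only the phase of its Fourier transform, so the magnitude spectrum stays identical, while the loudest moment moves from the start to the end. The decaying test tone below is a crude stand-in for a piano note, not a model of one, and the helper names are hypothetical.

```python
import cmath
import math

def magnitude_spectrum(samples):
    """DFT magnitudes for bins 0..N/2 (naive O(N^2), fine for short signals)."""
    n = len(samples)
    return [
        abs(sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples)))
        for k in range(n // 2 + 1)
    ]

def peak_index(samples):
    """Index of the sample with the largest absolute value."""
    return max(range(len(samples)), key=lambda i: abs(samples[i]))

# Crude piano-like test note: instant attack, then exponential decay.
sample_rate = 8000
note = [math.exp(-i / 40) * math.sin(2 * math.pi * 440 * i / sample_rate) for i in range(256)]
reversed_note = note[::-1]

forward_mag = magnitude_spectrum(note)
backward_mag = magnitude_spectrum(reversed_note)
```

`forward_mag` and `backward_mag` match to within floating-point error, yet `peak_index` puts the loudest sample near the start of `note` and near the end of `reversed_note`: same spectrum, opposite envelope.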

Vocal timbre

The human voice is one of the most recognizable sounds around us. We rely on the timbral characteristics of a voice to judge a speaker’s gender and age and to pick out the familiar voices of friends and family in crowded spaces. Just as the shape of an acoustic instrument determines the timbre it produces, the shape of a person’s vocal cords, vocal tract, and nose, as well as the rest of their body, determines the timbre of their voice.

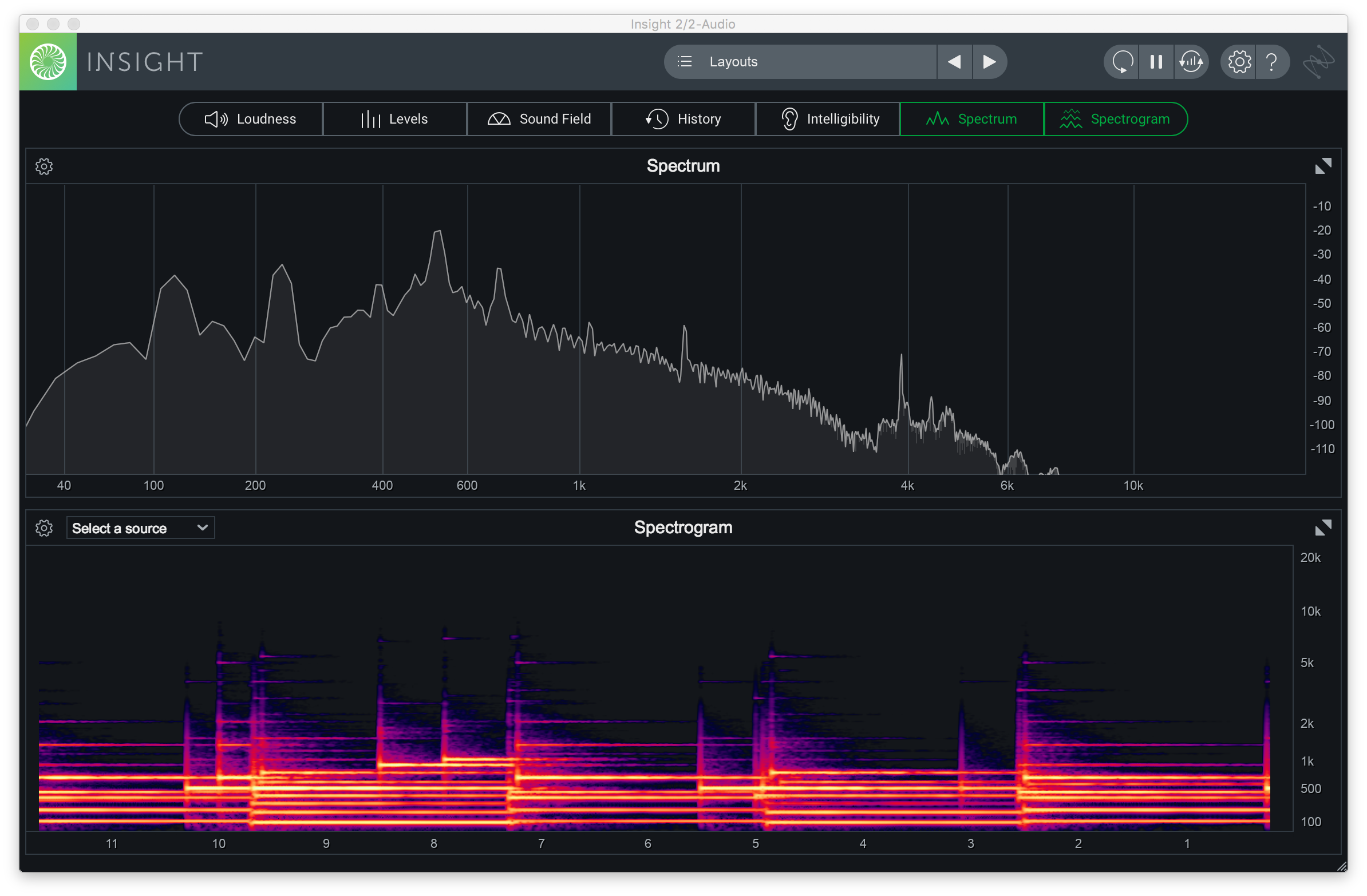

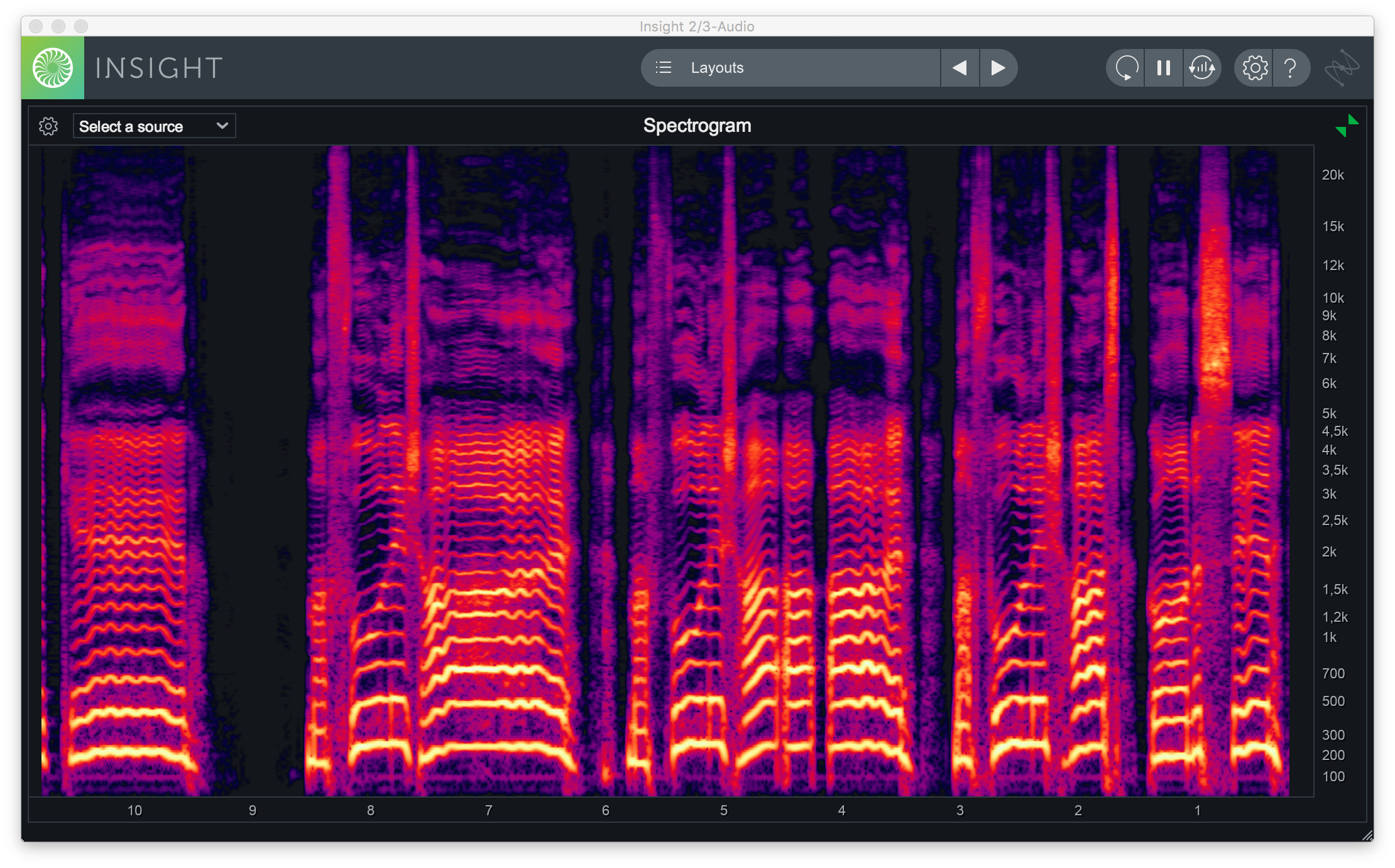

Connecting the timbral characteristics of a voice with frequencies is an essential skill for recording, producing, and mixing. A song’s emotional arc often relies on changes in vocal timbre. To make a chorus sound more energetic, a vocalist may sing softly and in a lower register during the verse for contrast. This style of singing produces a dark or mellow timbre.

Verse vocal timbre

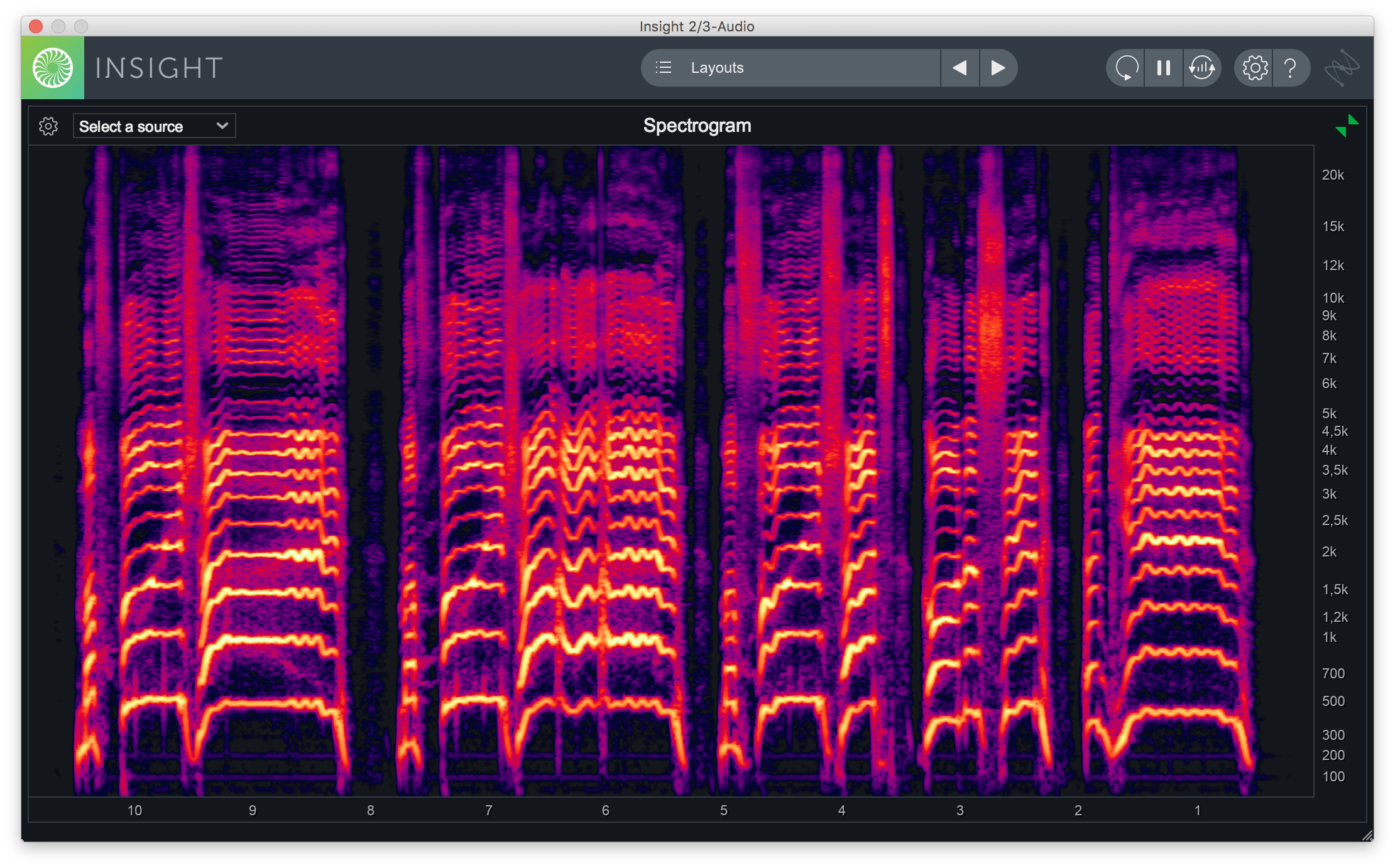

In the spectrogram of the chorus vocal below, harmonic intensity stays high up to 5 kHz, whereas the verse harmonics begin to taper off around 2 kHz. There is also more high-frequency information in the 7–12 kHz range.

Chorus vocal timbre
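One common way to put a number on this kind of brightness difference is the spectral centroid, the amplitude-weighted average frequency of a spectrum. The spectra below are made-up stand-ins loosely shaped after the description above (harmonics tapering from about 2 kHz for the verse and 5 kHz for the chorus); they are not measurements of these recordings, and the function names and rolloff curve are assumptions.

```python
def spectral_centroid(partials):
    """Amplitude-weighted mean frequency of (frequency_hz, amplitude) pairs."""
    total = sum(amp for _, amp in partials)
    return sum(freq * amp for freq, amp in partials) / total

def toy_vocal_spectrum(fundamental_hz, count, rolloff_hz):
    """Hypothetical harmonic spectrum: full-level harmonics up to rolloff_hz,
    then amplitudes falling as (rolloff_hz / f) ** 2 above it."""
    partials = []
    for n in range(1, count + 1):
        freq = n * fundamental_hz
        amp = 1.0 if freq <= rolloff_hz else (rolloff_hz / freq) ** 2
        partials.append((freq, amp))
    return partials

verse = toy_vocal_spectrum(220, 50, rolloff_hz=2000)   # tapers around 2 kHz
chorus = toy_vocal_spectrum(220, 50, rolloff_hz=5000)  # stays strong to 5 kHz
print(round(spectral_centroid(verse)), round(spectral_centroid(chorus)))
```

The chorus stand-in has the higher centroid, matching the brighter timbre we hear.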

Few voices sit properly in a mix without a bit of enhancement. With a careful selection of effects, we can improve the timbre of the voice without changing its natural character too much. I applied a few EQ cuts where the vocal was too harsh, then added light compression and pitch correction. The vocal sounds livelier while staying true to the original performance.
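What "light compression" does to level can be understood through a compressor’s static curve: below the threshold the signal passes unchanged, and above it every `ratio` dB of input yields only 1 dB of output. The -18 dB threshold and 2:1 ratio defaults are illustrative assumptions, not the settings used on this vocal, and real compressors add attack and release smoothing (and often a soft knee) on top of this curve.

```python
def compressor_output_db(input_db, threshold_db=-18.0, ratio=2.0):
    """Static hard-knee compressor curve: levels above the threshold are
    scaled down by the ratio; levels at or below it pass unchanged."""
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio

def gain_reduction_db(input_db, threshold_db=-18.0, ratio=2.0):
    """How many dB the compressor turns the signal down at this input level."""
    return input_db - compressor_output_db(input_db, threshold_db, ratio)

for level in (-30.0, -18.0, -10.0, -2.0):
    print(level, compressor_output_db(level), gain_reduction_db(level))
```

A low ratio like 2:1 only trims the loudest phrases, which is why it can even out a vocal without flattening its character.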

Conclusion

Familiarity with timbre in music gives us a deeper understanding of common studio effects like distortion, filtering, and EQ, and of how to synthesize new sounds. Use the spectrogram in Insight 2 to analyze the timbre of interesting sounds and instruments and learn what makes them unique. This will sharpen your critical listening skills and improve the songs you work on.