What is sound? Sound explained

What is sound? Explore the science of sound: its creation, transmission, and characteristics like frequency and amplitude.

Sound: we hear it everywhere. But what exactly is sound? That’s what we’re going to talk about today. Why? Because diving into this fundamental question is essential if you want to further your art. Yes, defining “sound” can become both confusing and technical. In the wrong hands, it can be boring. But powering through can only help you along your audio journey.

You can glean an enormous amount of inspiration from understanding the science of sound. Once you know how sound works—and why it behaves the way it does—the work of eliciting emotion through sound becomes faster, more intuitive, and more fun.

So, let’s get started exploring the physics of sound. We shall see how the science directly impacts our art.

Follow along using our free Ozone 11 EQ.

Sound waves: the building blocks of what we hear

At its core, sound is the vibration of particles in a medium such as air, water, or solid matter. These vibrations travel as waves, and when they reach our ears, our brains interpret them as sound. A sound wave can reach our ears by different paths (straight from the source or bounced off nearby surfaces) and at different speeds (depending on the medium it travels through), and all of this influences what we hear.

This seems simple enough to grasp, until you realize that sound isn’t the only thing to travel in waves. We feel the impact of earthquakes through seismic waves. Light reaches us through the propagation of waves too, as do terrestrial radio signals and cellular networks. The very word “wave” is what we use to describe the movement of the ocean.

All of this raises a larger question:

What is a wave?

Physics is often obsessed with waves and particles. In a very general way, we can make broad definitions of what constitutes a wave and what constitutes a particle. But first, a disclaimer I feel compelled to include:

At the very edges of knowledge—where physicists debate high-minded concepts like String Theory, M-Theory, Loop Quantum Gravity, and other feats of mathematical enterprise—the differences between waves and particles often break down.

All of this is to say, we’re simplifying things here. If you want to get super duper in depth about quantum mechanics, this is not the article for you.

Now, onto waves:

We can define a wave as an oscillation or disturbance, one that carries energy from one place to another. Waves move, and they move in very specific ways. Overlapping waves, for instance, can combine to create a new and more powerful wave. This is called constructive interference.

On the other hand, two waves can effectively cancel each other out, which is called destructive interference. Anyone who’s ever tried to multi-mic an instrument might very well understand this on an intuitive level.
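You can see both kinds of interference numerically. Add two sine waves of the same frequency, then look at the peak of the sum. The Python sketch below is minimal and assumes idealized, equal-amplitude sine waves, which real instruments and mic signals never are. The function names are illustrative only.

```python
import math

def sine(freq_hz, t, phase_rad=0.0, amplitude=1.0):
    """Instantaneous value of a sine wave at time t (in seconds)."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t + phase_rad)

def peak_of_sum(freq_hz, phase_offset_rad, sample_rate=48_000, duration_s=0.01):
    """Sum two equal-amplitude sines, one shifted by phase_offset_rad,
    and return the largest absolute value of the combined wave."""
    n = int(sample_rate * duration_s)
    peak = 0.0
    for i in range(n):
        t = i / sample_rate
        combined = sine(freq_hz, t) + sine(freq_hz, t, phase_offset_rad)
        peak = max(peak, abs(combined))
    return peak

# In phase: the two waves reinforce each other (constructive interference).
print(f"in phase:      peak {peak_of_sum(100.0, 0.0):.3f}")
# 180 degrees out of phase: the two waves cancel (destructive interference).
print(f"out of phase:  peak {peak_of_sum(100.0, math.pi):.3f}")
```

When the two waves are in phase, the peak doubles. When one is shifted by half a cycle, the sum collapses to essentially zero.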

Mechanical waves versus electromagnetic waves

Broadly speaking, waves are either mechanical or electromagnetic in nature (again, we’re keeping things simple and leaving quantum physics out of this).

An electromagnetic wave requires no medium to propagate, so it can cross the vacuum of space. Starlight is a good example. Visible light from a star travels across light-years of near-empty space and reaches our eyes without needing air or any other medium to carry it. Radio waves are much the same in this respect.

A mechanical wave, on the other hand, requires a medium to make itself felt or heard. Feeling a seismic wave in an earthquake is impossible without the medium of solid ground.

Sound operates in much the same way: you hit a drum with a stick, and the drum vibrates. When the drum vibrates, it pushes and pulls all the surrounding air molecules, creating a wave of pressure changes. These fluctuations compress and decompress the air, moving outward like ripples on a pond. The result? A sound wave, classically described.

How sound waves work

When an object vibrates—whether it’s a plucked guitar string or a blown saxophone reed—it transfers energy to the surrounding particles, setting them into motion. This energy moves outward from the source in waves, pushing and pulling the air molecules all around.

These molecules experience regions of compression (where molecules are packed closer together) and rarefaction (where molecules are spread apart). These pressure variations ripple outward from the source, carrying sound energy through the air to our ears.

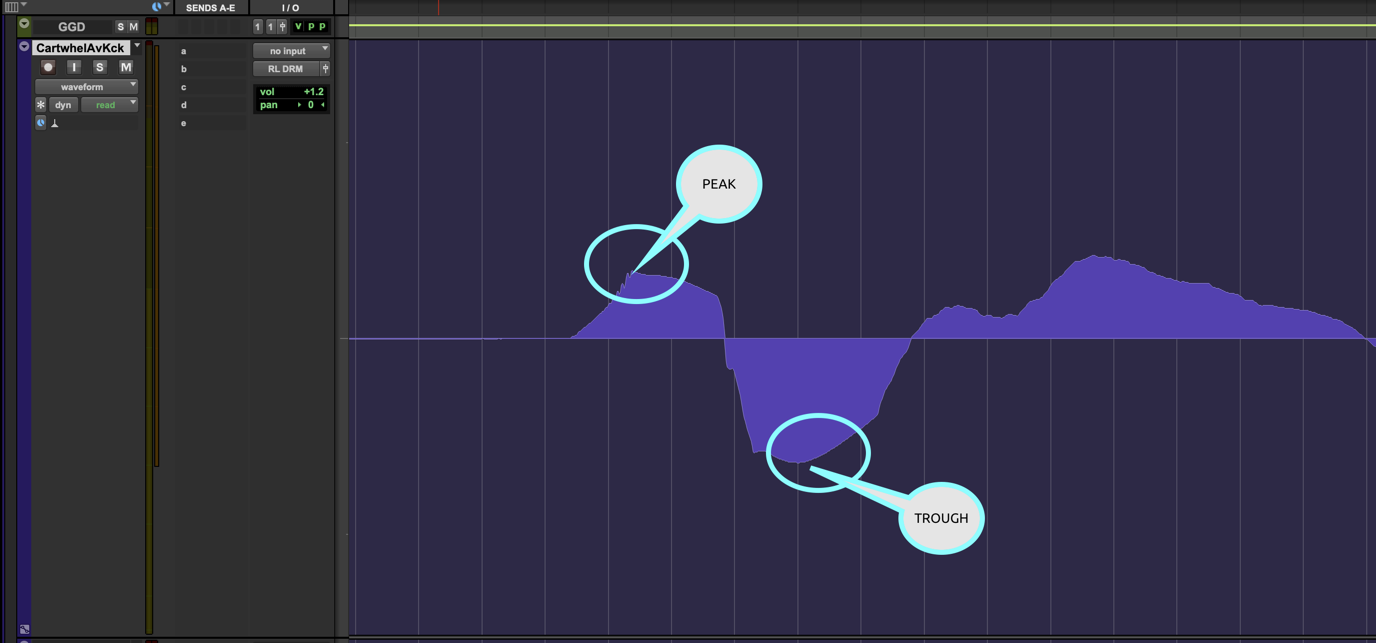

Compression and rarefaction are actually represented in the waveforms you see in your DAW.

Image: peak and trough

The peak represented in this image is the compression, whereas the trough is the rarefaction.

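We call the distance from one peak (or trough) to the next a “wavelength.” We call one complete oscillation, from compression through rarefaction and back again, a “wave cycle,” and the time one cycle takes is its period. The two are directly connected: a wavelength is the distance the wave travels during one cycle.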

For sound traveling through a given medium, the length of a wavelength depends on its frequency. That brings us to our next points of concern: frequency and amplitude, as they apply to sound waves.

Frequency and pitch

In scientific terms, frequency is the number of complete wave cycles that pass a given point in space during a given unit of time. The most common measurement is cycles per second, more often called hertz (Hz). 20 Hz is shorthand for “20 wave cycles per second.”
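Frequency, the duration of one cycle (the period), and wavelength are tied together by simple arithmetic: period = 1 / frequency, and wavelength = speed of sound / frequency. The Python sketch below is minimal and assumes a speed of sound of roughly 343 m/s, which holds for dry air at about 20 °C. The real figure shifts with temperature and medium.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s, approximate, dry air at about 20 °C

def period_s(freq_hz):
    """Duration of one wave cycle, in seconds."""
    return 1.0 / freq_hz

def wavelength_m(freq_hz, speed_m_s=SPEED_OF_SOUND_AIR):
    """Distance the wave travels during one cycle, in meters."""
    return speed_m_s / freq_hz

# Span the audible range, from 20 Hz up to 20 kHz.
for f in (20, 100, 1_000, 20_000):
    print(f"{f:>6} Hz: period {period_s(f) * 1000:.3f} ms, "
          f"wavelength {wavelength_m(f):.3f} m")
```

A 20 Hz wave is about 17 m long, while a 20 kHz wave is under 2 cm. That spread goes a long way toward explaining why lows and highs behave so differently in a room.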

In artistic and musical terms, frequency usually corresponds to pitch. The faster the sound wave vibrates, the higher its frequency, and in turn, the higher the pitch we hear.

For example, a low bass note might vibrate around 60 Hz, while a high-pitched whistle could reach 8,000 Hz or more. Our ears are incredibly sensitive to these changes, which is why we can easily distinguish between the deep thump of a kick drum and the bright shimmer of a cymbal.

In audio production, we engineers shape frequencies with tools like equalizers and saturators. Any given sound is made up of complex waveforms that carry a whole swath of frequencies. Some of them are necessary to the mix, and some of them are not.

So, we use equalizers to shape these frequencies. Muddy guitar? Cut around 300 Hz. But here’s the thing: 300 Hz also roughly corresponds to the note D, specifically D4, which sits at about 294 Hz in twelve-tone equal temperament tuned to A4 = 440 Hz.
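To check that kind of correspondence yourself, convert a frequency to the nearest note in twelve-tone equal temperament. The Python sketch below assumes the common A4 = 440 Hz reference and MIDI-style numbering, in which note 69 is A4 and note 60 is C4 (middle C). `nearest_note` is a hypothetical helper name.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def nearest_note(freq_hz, a4_hz=440.0):
    """Return (note name with octave, cents offset) for the nearest
    equal-tempered note, using MIDI numbering where 69 = A4."""
    midi_float = 69 + 12 * math.log2(freq_hz / a4_hz)  # 12 semitones per octave
    midi = round(midi_float)
    cents = (midi_float - midi) * 100  # 100 cents per semitone
    name = NOTE_NAMES[midi % 12]
    octave = midi // 12 - 1  # MIDI 60 -> C4
    return f"{name}{octave}", cents

note, cents = nearest_note(300.0)
print(note, f"{cents:+.1f} cents")
```

For 300 Hz, this lands on D4, sharp by roughly a third of a semitone. That is close enough that a resonance there will read to the ear as a D.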

When you intuitively understand the relationship between frequency and pitch, EQing becomes easier. You hear an annoying resonance in a keyboard track, recognize it as a single whistling pitch, and cut it dramatically within seconds.

Another example:

Say you know the lowest note played by the bass player on a particular tune is G1. You’ve also made the decision that you want the kick to occupy the subs in this particular mix.

If you know that G1 is roughly 49 Hz, you know the bass has no fundamental below that point. A high-pass filter on the bass set just below 49 Hz can therefore make room for the sub information on your kick while leaving the bass notes largely intact.
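You can also compute a note's frequency directly. In twelve-tone equal temperament, each semitone multiplies the frequency by 2^(1/12). The Python sketch below is minimal and assumes A4 = 440 Hz and MIDI-style numbering (A4 = 69). `note_to_hz` is a hypothetical helper name.

```python
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}

def note_to_hz(name, octave, a4_hz=440.0):
    """Equal-tempered frequency of a note, using MIDI numbering (69 = A4)."""
    midi = 12 * (octave + 1) + NOTE_OFFSETS[name]
    return a4_hz * 2 ** ((midi - 69) / 12)

print(f"G1 = {note_to_hz('G', 1):.2f} Hz")
print(f"E1 = {note_to_hz('E', 1):.2f} Hz")  # lowest open string of a standard 4-string bass
```

G1 comes out at just under 49 Hz. Knowing the lowest note in the part gives you a concrete number to set filters around.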

By understanding how different frequencies correlate to pitch, you can quickly control which elements in a mix stand out.

Amplitude and “volume”

Amplitude is the measure of a sound wave’s intensity. It corresponds directly to what we loosely call volume, and to all the terms we use to avoid the word volume: gain, level, and trim all refer to a sound wave’s amplitude.

Essentially, amplitude represents the size of the wave—or more accurately, the degree to which air molecules are compressed and stretched as the sound travels. A larger amplitude means the wave carries more energy, which results in a louder sound. In contrast, a smaller amplitude produces quieter sounds, as less energy is being transferred.

Think of amplitude as the height of a wave on the ocean: the taller the wave, the more powerful it is. Likewise, the larger a sound wave’s amplitude, the louder it seems to our ears.
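In practice, engineers rarely work with amplitude as a raw number. Gain, level, and trim are usually expressed in decibels, a logarithmic scale. For amplitude (as opposed to power), the level change in dB is 20 × log10 of the amplitude ratio. Here is a minimal Python sketch with illustrative function names:

```python
import math

def amplitude_ratio_to_db(ratio):
    """Level change in decibels for a change in amplitude by `ratio`."""
    return 20 * math.log10(ratio)

def db_to_amplitude_ratio(db):
    """Inverse: the amplitude multiplier for a given gain in decibels."""
    return 10 ** (db / 20)

print(f"{amplitude_ratio_to_db(2.0):+.2f} dB")   # doubling the amplitude
print(f"{amplitude_ratio_to_db(0.5):+.2f} dB")   # halving the amplitude
print(f"{db_to_amplitude_ratio(-6.0):.3f}x")     # a -6 dB fader move
```

This is why "about 6 dB" is the rule of thumb for doubling or halving a signal's amplitude.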

In audio production, managing amplitude is half the job. Too much amplitude can result in unwanted distortion or clipping at key places in the chain, where the sound exceeds the limits of the processing device or playback system.
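A simple way to see clipping is to push a sine wave past a fixed ceiling and count how many samples get flattened. The Python sketch below assumes hard clipping at ±1.0, which is full scale in floating-point audio. Real analog gear often saturates more gradually, and the variable names are illustrative.

```python
import math

def hard_clip(sample, ceiling=1.0):
    """Flatten any value beyond +/- ceiling: a simple model of a signal
    exceeding a converter's full-scale limit."""
    return max(-ceiling, min(ceiling, sample))

sample_rate = 48_000
freq_hz = 100.0
gain = 1.5  # drive a full-scale sine 50% past the ceiling

clipped = 0
n = sample_rate // int(freq_hz)  # samples in one full cycle
for i in range(n):
    x = gain * math.sin(2 * math.pi * freq_hz * i / sample_rate)
    if hard_clip(x) != x:  # this sample was flattened
        clipped += 1

print(f"{clipped} of {n} samples clipped ({100 * clipped / n:.1f}%)")
```

Even this modest overshoot flattens roughly half of every cycle. That squared-off shape is the distortion you hear.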

Too little amplitude can cause important details to get lost, or to disappear entirely beneath the self-noise (the noise floor) of a hardware processor. This is where tools like faders, trim pots, compressors, and limiters come into play. They allow engineers to control amplitude, ensuring that all elements in a track sit comfortably within a desired volume range.

And of course, imposing an amplitude envelope on an existing sound can reshape its rhythm and groove. This is one reason many engineers like to use compressors.
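At the heart of most compressors is a static gain curve that decides how far to turn the level down once it crosses a threshold. Attack and release times then control how quickly that gain change follows the signal, and that timing is what reshapes the envelope. The Python sketch below models the static curve only. It assumes a hard knee and illustrative threshold and ratio values, with no attack, release, or makeup gain.

```python
def compressor_output_db(input_db, threshold_db=-20.0, ratio=4.0):
    """Static gain curve of a hard-knee compressor. Below the threshold the
    level passes unchanged; above it, every `ratio` dB of input yields 1 dB
    of output."""
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio

for level in (-30.0, -20.0, -12.0, 0.0):
    out = compressor_output_db(level)
    print(f"in {level:6.1f} dB -> out {out:6.1f} dB "
          f"(gain reduction {level - out:.1f} dB)")
```

Quiet material passes through untouched, while loud peaks are pulled down. That narrowing of the dynamic range is what a compressor's timing controls then turn into groove.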

Art in the science

Now that we’ve covered the basic way mechanical sound waves work, let’s dive deeper into how they affect you as a producer or engineer. We’ve already covered frequency and amplitude, so let’s move on to some other concerns.

Sound waves and mic placement

The behavior of sound waves is crucial to understand when positioning microphones. Sound waves behave differently depending on their frequency and the environment, largely because their wavelengths range from under 2 cm at the top of the audible range to many meters at the bottom.

For example, low frequencies tend to spread out from a source more evenly in all directions than higher frequencies do. In practical terms, this means the placement of microphones can dramatically affect the sound captured. Positioning a directional mic very close to a source exaggerates its low frequencies; we call this the proximity effect, and it can be used to great advantage or detriment.

How sound behaves in a space also changes our technique. Record your drum kit in a stone-tiled room, and you’ll get a very reverberant, reflective sound. Use a carpeted room, and the sound will usually be drier. Careful mic placement ensures that the original sound is captured as accurately and cleanly as possible, laying the foundation for a strong mix.
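To put rough numbers on that difference, acousticians often start with Sabine's formula, RT60 ≈ 0.161 × V / A. Here V is the room volume in cubic meters, and A is the total absorption: each surface's area times its absorption coefficient, summed. The Python sketch below is minimal. The room dimensions and absorption coefficients are illustrative assumptions, not measured values, and Sabine's formula itself is only a rough estimate that assumes a diffuse sound field.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine estimate of reverberation time (seconds for a 60 dB decay).
    `surfaces` is a list of (area_m2, absorption_coefficient) pairs."""
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / total_absorption

# Hypothetical 5 m x 4 m x 3 m room.
length, width, height = 5.0, 4.0, 3.0
volume = length * width * height
floor = length * width
walls_and_ceiling = 2 * (length * height + width * height) + length * width

# Illustrative coefficients only (real values vary with frequency and
# material): hard surfaces ~0.02, carpet ~0.3.
hard_room = [(walls_and_ceiling, 0.02), (floor, 0.02)]
carpeted_room = [(walls_and_ceiling, 0.02), (floor, 0.3)]

print(f"hard floor:   RT60 ~ {sabine_rt60(volume, hard_room):.1f} s")
print(f"carpet floor: RT60 ~ {sabine_rt60(volume, carpeted_room):.1f} s")
```

Changing only the floor material shortens the estimated decay several-fold. This is the "drier" sound of a carpeted room, in numbers.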

Sound energy and acoustic treatment

As hinted at above, sound energy interacts with the environment in ways that can either enhance or degrade the quality of a recording. In a studio setting, sound energy bounces off walls, ceilings, and floors, creating reflections that can muddy the overall sound.

To counteract this phenomenon, music producers use acoustic treatment such as acoustic panels or bass traps to manage how sound energy moves within a room. By controlling these reflections, acoustic treatment ensures that what you hear in the room is a more accurate representation of the recording, making mixing decisions more reliable and helping producers create a clean, focused sound.

The room itself shapes the sound: you may have heard of room modes, for instance. You might have been told to avoid mixing in square rooms, or to keep your desk in the front or back third of a room, depending on the size.
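Room modes are easiest to see along a single axis. Between two parallel walls L meters apart, resonances build up at f = n × c / (2L). In a square room, the length and width share every one of these frequencies, so the resonances stack on top of each other. The Python sketch below covers axial modes only; real rooms also have tangential and oblique modes. It assumes c ≈ 343 m/s and uses hypothetical room dimensions and helper names.

```python
SPEED_OF_SOUND = 343.0  # m/s, approximate, air at about 20 °C

def axial_modes(dimension_m, count=4, c=SPEED_OF_SOUND):
    """First `count` axial mode frequencies (Hz) between two parallel
    surfaces `dimension_m` apart: f = n * c / (2 * L)."""
    return [n * c / (2 * dimension_m) for n in range(1, count + 1)]

def coincident_modes(dims_m, count=4, tolerance_hz=1.0):
    """Axial-mode frequencies shared (within tolerance) by two or more
    room dimensions."""
    per_dim = [axial_modes(d, count) for d in dims_m]
    shared = set()
    for i in range(len(per_dim)):
        for j in range(i + 1, len(per_dim)):
            for f1 in per_dim[i]:
                for f2 in per_dim[j]:
                    if abs(f1 - f2) <= tolerance_hz:
                        shared.add(round(f1, 1))
    return sorted(shared)

square = (4.0, 4.0, 3.0)           # hypothetical square footprint
rectangular = (5.2, 4.1, 2.7)      # hypothetical non-square room
print("square room:     ", coincident_modes(square))
print("rectangular room:", coincident_modes(rectangular))
```

In the square room, several low-frequency resonances pile up at the same pitches. In the rectangular room, they spread out, which is the reasoning behind the advice to avoid square mixing rooms.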

I like to think of rooms as shepherds of the sound at large: they direct the course of events.

Sound behavior and phase

When working with multiple microphones or stereo recordings, understanding destructive interference is crucial for achieving a balanced mix. Destructive interference happens when sound waves cancel each other out, and in a recording it shows up as phase problems.

If two waves are out of phase, they can cancel each other out, resulting in a thin or hollow sound. In stereo recording, phase issues can affect how instruments and vocals sit in the stereo field, impacting the overall depth and clarity of the mix. By carefully aligning sound sources and using tools like phase alignment plugins, producers can avoid phase cancellation and create a wide, immersive stereo image that enhances the listener’s experience, whether they’re using headphones or speakers.
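When two mics pick up the same source at different distances, the farther mic hears it slightly later. Summing the two signals then produces a series of cancellations known as comb filtering. The Python sketch below is minimal and assumes two equal-level signals, a single direct path, and c ≈ 343 m/s. The 0.5 m spacing and 48 kHz sample rate are illustrative.

```python
SPEED_OF_SOUND = 343.0  # m/s, approximate, air at about 20 °C

def arrival_delay_s(extra_distance_m, c=SPEED_OF_SOUND):
    """Extra travel time for sound to reach the farther mic."""
    return extra_distance_m / c

def comb_notches_hz(delay_s, max_hz=2000.0):
    """Frequencies cancelled when a signal is summed with an equal-level
    copy of itself delayed by delay_s: odd multiples of 1 / (2 * delay)."""
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2 * delay_s)
        if f > max_hz:
            return notches
        notches.append(f)
        k += 1

delay = arrival_delay_s(0.5)  # second mic sits 0.5 m farther from the source
print(f"delay: {delay * 1000:.2f} ms = {delay * 48_000:.0f} samples at 48 kHz")
print("notches below 2 kHz (Hz):", [round(f) for f in comb_notches_hz(delay)])
```

Half a meter of extra distance is enough to carve notches into the low mids. Nudging the closer mic later by roughly that many samples lines the two signals up, and that is the basic idea behind time-aligning multiple mics.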

Learn more about sound

As I hope you understand by now, the physics of sound is more than just academic—it’s a crucial tool for any audio producer or engineer. By grasping the fundamentals of sound waves, frequency, and amplitude, you can make more informed decisions in every aspect of production, from mic placement to mixing and mastering.

Whether you’re managing room acoustics, avoiding phase issues, or sculpting the perfect EQ curve, your knowledge of sonic physics directly impacts the art of creating music. Armed with this knowledge, you’ll find yourself able to solve problems faster, create more balanced mixes, and ultimately, bring more emotion and impact to your work.