Runtime DSP, It’s Tricky: Video Game and VR Audio with Brian Schmidt

Video game audio veteran Brian Schmidt talks with iZotope about the top trends of today and tomorrow in video game and VR audio.

With over thirty years of video game audio experience, including ten of them leading the audio technology department for Xbox at Microsoft, Brian Schmidt knows a thing or two about audio. As he writes on his site, “Since I started in 1987, I've witnessed (and helped transform) the industry from ‘bleeps and bloops’ to the current surround sound, high power, high fidelity systems we have today.”

Schmidt, the founder and executive director of GameSoundCon, an industry-leading conference focused on video game music and sound design, was kind enough to speak with iZotope about 2017’s top audio trends in video game and VR audio, as well as how the development of runtime DSP technology has helped solve two of the biggest creative challenges in game audio today.

What are the top trends in 2017 for creating sounds and music for virtual reality and game applications?

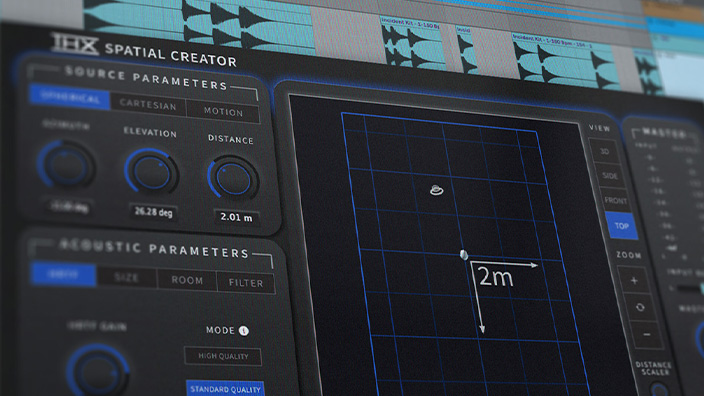

One thing I’m excited about is how the traditional pro audio industry has taken a second look at us in the game industry. Pro audio tools such as Nuendo 8 have new features specifically aimed at interactive content creators. Game Audio Connect is another workflow improvement, as are seemingly mundane features such as automatic naming schemes and version control using Perforce. Features like these go a long way toward helping sound designers and composers work more efficiently, and in games, as with other media, efficiency means more iterations, which leads to better results. Companies like iZotope have tailored their tech to be used within games. And of course, there’s the impact virtual reality has had on game tools and processes. FMOD Studio now ships with a 3D HRTF spatialization technology from Google, and there are many other options for 3D audio spatialization.

One of the more interesting, although perhaps less sexy, developments was the recent release of the Wwise Authoring API. Although it doesn’t really break new ground in game audio technology and features per se, it has real potential to improve workflow. And, as dull as it sounds, workflow and pipeline can be among the largest roadblocks to creating a great-sounding game. People are already using this technology to extend the capabilities of products like REAPER or Soundminer to facilitate and improve game audio workflow.

For VR/AR, there’s a lot of great work being done in the creation of virtual audio environments. In games, for a very long time now, we have relied on faking it when it came to putting the player into an acoustic world. For example, we’d tag rooms with parameterized reverb presets, and not really make any attempt at recreating the physical environment. Similarly, we would use clumsy, overly simplistic methods for simulating the effect of a sound diffusing around the corner, or going behind an object. Proper sound propagation and diffusion simulation used too much CPU, and generally didn’t provide enough perceptual bang for CPU buck.

But in VR, these kinds of approximations can hurt the effect we’re all trying to create: placing someone in an acoustic world in the same way the graphics immerse the player in the visual world. Conflicts between visuals and audio become easier to spot, leading to a kind of cognitive dissonance.

Recently, really good work has been done on performing these calculations offline or in the cloud ahead of time, or even on graphics processors, to lighten the burden on the game system itself. So I’m excited to see those technologies start to get used in the coming year.

What’s an example of an excellent audio experience with VR today?

Job Simulator comes to mind. The sound designers have figured out how to use these technologies within the limits of what they can achieve, rather than trying to push them past that. For example, with current technology, elevation effects are much more difficult to create reliably than horizontal effects. So a good VR application will emphasize lateralization and avoid gameplay elements that require the player to accurately hear a sound above them or down by their feet; the tech just isn’t quite there yet.

Just as important, Job Simulator shows the attention to detail you need to pull off convincing audio for virtual reality. For example, one of its virtual objects (the ‘bot’) is made up of multiple sounds, each of which is given its own 3D roll-off curve. Virtual reality is an audio microscope: even the smallest problems are magnified by it.
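To make per-layer roll-off concrete, here is a minimal Python sketch of one compound object whose layers each get their own distance-attenuation curve. The layer names (`voice`, `servo_whine`, `footsteps`), the distances, and the two curve shapes (clamped inverse-distance and linear) are illustrative assumptions, not Job Simulator's actual settings or any particular engine's API.

```python
from dataclasses import dataclass


@dataclass
class Rolloff:
    """Hypothetical per-sound distance attenuation settings."""
    min_distance: float     # metres; full volume inside this radius
    max_distance: float     # metres; attenuation stops changing beyond this
    curve: str = "inverse"  # "inverse" or "linear"

    def gain(self, distance: float) -> float:
        # Clamp the distance into the curve's active range.
        d = min(max(distance, self.min_distance), self.max_distance)
        if self.curve == "linear":
            # Falls from 1.0 at min_distance to 0.0 at max_distance.
            return 1.0 - (d - self.min_distance) / (self.max_distance - self.min_distance)
        # Inverse distance: amplitude halves (about -6 dB) per doubling of distance.
        return self.min_distance / d


# One object, several layers, each with its own curve (names are illustrative).
BOT_LAYERS = {
    "voice": Rolloff(min_distance=1.0, max_distance=20.0),
    "servo_whine": Rolloff(min_distance=0.2, max_distance=2.0, curve="linear"),
    "footsteps": Rolloff(min_distance=0.5, max_distance=10.0),
}


def layer_gains(distance: float) -> dict[str, float]:
    """Linear gain for every layer of the object at a given listener distance."""
    return {name: r.gain(distance) for name, r in BOT_LAYERS.items()}


print(layer_gains(0.5))
print(layer_gains(3.0))
```

At 3 m the close-up servo layer has already faded to silence while the voice is still clearly audible, the kind of detail a single shared curve for the whole object cannot express.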

And finally, the needs of the game come first: “sounds right/is fun” wins over “is an accurate physical simulation.” Those are the sorts of things that all go into making a really compelling VR audio experience.

That said, we are just getting to the point where some of the tools and technologies are becoming streamlined enough to do something excellent, though I’m not sure we’re fully there yet. The various spatialization technologies are all very cool, but for the most part they aren’t all that different from some of the early work done by Kendall and Martens 30+ years ago. It turns out that 3D sound perception is incredibly complex, and we haven’t yet been able to create technology that can reliably pass the “audio Turing test,” in which you literally could not tell the difference between a real sound and an artificial one.

What are some things that aren’t possible today in VR sound but might be in the future?

People sometimes think there’s a line between creating a sound and placing that sound into a VR space. However, the two are very much interdependent. As our simulated worlds become more realistic, we’re going to need to move away from simply playing audio files to represent those sounds—improvements in physical modelling synthesis, or physics-guided DSP processing of sounds will be part of the standard toolbox in the future.

Real-time, accurately physically modeled environments are something that will bring a current-gen CPU to its knees. For example, a truly accurate real-time acoustic model of a cathedral, tracing all the reflections down to their -60 dB point, requires massive computation. At some point, CPU cycles will be cheap enough to do even a great concert hall justice, allowing you to virtually sit in any seat in the house. But that’s beyond what we can do right now, even though we know pretty much how we’d approach it.

In an article on the future of game audio, Ben Minto discusses how our real world often gets “wrong” what we might expect to be a true representation of sound. He says, “Working in a built-up city, I’m still surprised by how often physics gets it ‘wrong’ when a helicopter flies overhead or an ambulance approaches from a distance. All the ‘conflicting’ reflections from the buildings make it really hard for my brain to pinpoint where the sound is coming from, its path and also its direction of travel. Is this something we want to replicate in our title or do we want to bend the rules to make the scenarios more readable?”

Yes, this is one of the big challenges we have in games and VR. Because we rely on a lot of automated processes (3D audio engines, game engines, etc.) to create our audio world, occasionally all that tech will render an audio scene faithfully and correctly, but the result just sounds bad or, worse, misleading, as in Ben’s example of not being able to localize a helicopter.

Traditional media doesn’t have this issue because you have exact control over the mix at all times. But in VR or games, there are an almost infinite number of potential mixes, and you can’t listen to every possible one.

If you have a gameplay element that requires you to know where the helicopter is, it’s going to be frustrating and not fun if the audio hinders rather than helps the player. So sometimes you have to bend the rules and override what the software says the “correct” processing (reverb, reflections, filtering, etc.) should be.
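One way to picture this kind of override is a small post-processing step that clamps what the acoustic simulation asks for, but only for sounds the designer has flagged as gameplay-critical. This is a hypothetical sketch: the `Processing` fields, the `gameplay_critical` flag, and the default limits (a 30% reverb send cap and a 4 kHz minimum occlusion cutoff) are assumptions chosen for illustration, not any engine's API.

```python
from dataclasses import dataclass


@dataclass
class Processing:
    reverb_send: float          # 0..1 wet amount suggested by the acoustic simulation
    occlusion_cutoff_hz: float  # low-pass cutoff suggested by occlusion/obstruction


def apply_gameplay_overrides(sim: Processing, gameplay_critical: bool,
                             max_reverb: float = 0.3,
                             min_cutoff_hz: float = 4000.0) -> Processing:
    """Bend the simulation's 'correct' answer so a critical sound stays readable."""
    if not gameplay_critical:
        # Ambience and other non-critical sounds keep the simulated result untouched.
        return sim
    return Processing(
        # Less reverb keeps the direct sound dominant.
        reverb_send=min(sim.reverb_send, max_reverb),
        # A higher cutoff keeps more high-frequency detail in the sound.
        occlusion_cutoff_hz=max(sim.occlusion_cutoff_hz, min_cutoff_hz),
    )


# A helicopter behind buildings: the simulation wants it very wet and very muffled.
simulated = Processing(reverb_send=0.8, occlusion_cutoff_hz=800.0)
print(apply_gameplay_overrides(simulated, gameplay_critical=True))
```

The design choice here is that the override is opt-in per sound, so the rest of the scene still benefits from the automated processing.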

I know localizing sounds is a topic you touch on in your blog post, "9 Things You Should Know When Creating Sound for Virtual Reality." My question is, how free should VR and 3D game sound designers feel to “bend reality’s rules”?

It depends on what you’re trying to achieve. If you are creating an entertainment product, then the criteria should be “does this creative decision make the game more fun and engaging?” If it does, then that one wins, regardless of how “correct” it is.

If you’re in a large virtual cave, it’s far more important to create an eerie feeling of being in a giant cave, probably with some massively exaggerated reverb, than it is to accurately model the reverb within that specific cave.

A key point is to ask, “What is my reference for reality?” Think of the cave example above. Most people have never actually been in a large cave, so they have no idea what it would truly sound like. However, most people probably have a pretty good idea of what they think it sounds like to be in a giant cave; maybe they remember a movie they saw, or they just imagine or extrapolate. In this case, what is the goal? To be “real”? Or to convince the player that it is “real”? Maybe being literally “real” destroys the player's immersion rather than reinforcing it. I’d argue that in most circumstances, matching expectations is more important than matching physical reality.

And of course, there’s the reality we break all the time in both VR and games—sound propagation delay. For example, if I see a player kick a soccer ball from half a football field away, physics says I should delay the sound by 150 ms. But if I do that, things feel less real—even broken. It turns out that if you add “realistic” sound propagation delay to games or VR, it just sounds wrong.
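The 150 ms figure is simply distance divided by the speed of sound. A minimal sketch, assuming a speed of sound of 343 m/s (dry air at about 20 °C) and half of a 105 m pitch; the `simulate_physics` switch is a hypothetical illustration of the choice described above, defaulting to no delay.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at roughly 20 °C


def propagation_delay_ms(distance_m: float) -> float:
    """Time for sound to travel distance_m, in milliseconds."""
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def scheduled_delay_ms(distance_m: float, simulate_physics: bool = False) -> float:
    """Delay actually applied at playback (hypothetical game-side switch)."""
    # Games usually skip the physically correct delay because it feels broken.
    return propagation_delay_ms(distance_m) if simulate_physics else 0.0


half_pitch_m = 105.0 / 2  # 52.5 m
print(round(propagation_delay_ms(half_pitch_m)))  # 153
print(scheduled_delay_ms(half_pitch_m))           # 0.0
```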

Now if you’re trying to use VR to see how a yet-to-be-built cathedral will sound, or emulate the sound of the great concert halls of the world, that’s an entirely different set of criteria used to make your decisions. For those I would yield to accuracy.

What other challenges does Virtual Reality audio present?

One of the big challenges we have in virtual reality audio is that, in nature, we really are not very good at using sound alone to pinpoint the location of objects. Unlike with our eyes, there is no one-to-one mapping between where an object is in the world and our neural receptors. Our ear/brain system is good at determining the frequency content of sounds moment to moment, and at combining the signals from our two ears and analyzing the differences between them. But that’s it. From those pieces of information, we have to infer where an object might be located in space. And there may or may not be complementary or opposing cues from our other senses.
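As one illustration of how ambiguous those two-ear differences can be, the interaural time difference (ITD) can be estimated with Woodworth's classic spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), where a is the head radius, c the speed of sound, and θ the lateral angle. This is a textbook model rather than anything described in this interview, and the head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values. The sketch shows that a source 30° to the front-right and one at 150° (behind-right) produce the same ITD, so this cue alone cannot tell front from back.

```python
import math

HEAD_RADIUS_M = 0.0875          # assumed average adult head radius
SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at roughly 20 °C


def woodworth_itd_us(azimuth_deg: float) -> float:
    """Interaural time difference in microseconds, Woodworth spherical-head model.

    Azimuth is measured clockwise from straight ahead (0 = front, 90 = right ear).
    """
    # Fold the azimuth onto a lateral angle in [-90, 90] degrees: a source in front
    # and its mirror image behind the listener map to the same value.
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))
    itd_s = HEAD_RADIUS_M / SPEED_OF_SOUND_M_PER_S * (lateral + math.sin(lateral))
    return itd_s * 1e6


print(round(woodworth_itd_us(90)))  # 656, the largest difference (source at the right ear)
print(math.isclose(woodworth_itd_us(30), woodworth_itd_us(150)))  # True: front/back ambiguity
```

Level and spectral (HRTF) cues are not modeled here; the point is only that the timing cue by itself leaves the brain with an inference problem.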

3D hearing is a very complex, interdependent phenomenon, which isn’t only DSP/filtering, but involves learned expectations as well as input from our other senses.

I love to show students a video of Grover demonstrating “near and far” and ask them how the sound changes as he moves from “near” to “far.” Most identify “he gets softer as he gets farther away,” “he sounds more reverberant,” and even “he sounds a bit more muffled.” But almost no one gets “when he is far away, he is shouting, but he is still soft.” So making a voice sound “far” isn’t just a matter of taking a “near” recording of a voice and applying a “sound-far-away” DSP process to it; it is also intimately tied to our expectations of the timbre and sound quality of near and faraway human voices. Not even the very best HRTF processor can do that.

How does having runtime DSP in games affect the user’s experience?

Runtime DSP is a huge leap in game audio, and I’ve been incredibly happy to see it occur over the past few years. It is another example of the pro audio industry paying attention to games. Runtime DSP helps solve two of the big creative challenges we have in game audio: repetition and interaction with the environment.

Repetition is an issue in games because we are actually pretty good at recognizing when we hear the exact same sound twice. Prior to the advent of recording, that literally never occurred in human experience. No vocal utterance, gunshot, or crash of garbage cans is ever exactly the same. But in games, when we play back WAV files, we present the identical sound every time, and this breaks the illusion we’re trying so hard to create. By using DSP in games, we can alter, subtly or not so subtly, how sounds sound each time they’re played. So rather than hearing the same dinosaur roar over and over, we can take an animal sound and pitch shift it, flange it, whatever, using runtime DSP effects. And each time we need the dinosaur to roar, we can pick different DSP parameters with different automations, giving us a unique sound each time it's heard. That’s only possible with in-game, runtime DSP effects. DSP gives us tremendously more flexibility in our sound effects generation.
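A minimal sketch of per-playback variation, assuming a simple varispeed pitch shift plus randomized gain and flanger amount. The parameter ranges (±2 semitones, up to 3 dB of cut, up to 40% flanger mix) and the `Variation` and `varispeed` names are hypothetical; a real engine would apply these through its own effect chain rather than plain Python lists.

```python
import random
from dataclasses import dataclass


@dataclass
class Variation:
    pitch_ratio: float  # playback-rate multiplier; 1.0 keeps the original pitch
    gain_db: float      # level offset for this playback
    flanger_mix: float  # 0.0 = dry, 1.0 = fully flanged


def random_variation(rng: random.Random, pitch_semitones: float = 2.0,
                     gain_range_db: float = 3.0,
                     max_flanger_mix: float = 0.4) -> Variation:
    """Pick fresh DSP parameters each time the sound is triggered."""
    semitones = rng.uniform(-pitch_semitones, pitch_semitones)
    return Variation(
        pitch_ratio=2.0 ** (semitones / 12.0),  # equal-tempered semitones to a rate ratio
        gain_db=rng.uniform(-gain_range_db, 0.0),
        flanger_mix=rng.uniform(0.0, max_flanger_mix),
    )


def varispeed(samples: list[float], ratio: float) -> list[float]:
    """Resample with linear interpolation; ratio > 1 raises pitch and shortens the sound."""
    out = []
    pos = 0.0
    while pos < len(samples) - 1:
        i = int(pos)
        frac = pos - i
        out.append(samples[i] * (1.0 - frac) + samples[i + 1] * frac)
        pos += ratio
    return out


rng = random.Random(7)
roar = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5] * 100  # stand-in for the recording
for _ in range(3):
    v = random_variation(rng)
    shifted = varispeed(roar, v.pitch_ratio)
    # gain_db and flanger_mix would be handed to the gain and flanger stages here.
    print(f"ratio={v.pitch_ratio:.3f} gain={v.gain_db:.1f} dB len={len(shifted)}")
```

Seeding the generator is only for repeatable output in this example; in a game, each trigger would simply draw the next set of parameters.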

DSP also lets us tailor sounds to specific environments or contexts. For example, suppose I have character dialog, but that character may or may not be using their radio to communicate. Storing the unprocessed dialog and running the “radioize” DSP at runtime gives us far more flexibility, and even allows for cases where a line starts radioized, but mid-sentence the radio is switched off because the player has gotten close enough to hear the character “acoustically.” And of course, now we can emulate damage to the radio, also by using DSP techniques that trash the sound or otherwise degrade it.
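A minimal sketch of the runtime “radioize” idea, assuming a crude chain of a one-pole high-pass, a one-pole low-pass (roughly a 300 Hz to 3 kHz band), and tanh saturation. The `Radioizer` class, the `render_line` helper, the cutoffs, and the drive amount are all hypothetical choices, not a specific product's effect. Because the dry line is what gets stored, the effect can be switched per audio block, which is what makes the mid-sentence change possible.

```python
import math


class Radioizer:
    """Hypothetical 'radio' effect: band-limit the voice, then saturate it."""

    def __init__(self, sample_rate: float, low_hz: float = 300.0,
                 high_hz: float = 3000.0, drive: float = 4.0) -> None:
        # One-pole smoothing coefficients for the two corner frequencies.
        self.a_low = math.exp(-2.0 * math.pi * low_hz / sample_rate)
        self.a_high = math.exp(-2.0 * math.pi * high_hz / sample_rate)
        self.drive = drive
        # Filter state is kept between calls so consecutive radio blocks are continuous.
        self.low_state = 0.0   # low-passed input, subtracted to form the high-pass
        self.high_state = 0.0  # output of the low-pass stage

    def process(self, block: list[float]) -> list[float]:
        out = []
        for x in block:
            # High-pass: the input minus its own low-passed version removes the bottom end.
            self.low_state = (1.0 - self.a_low) * x + self.a_low * self.low_state
            hp = x - self.low_state
            # Low-pass the result to remove the top end.
            self.high_state = (1.0 - self.a_high) * hp + self.a_high * self.high_state
            # tanh soft clipping adds grit; dividing by tanh(drive) keeps full scale at 1.0.
            out.append(math.tanh(self.drive * self.high_state) / math.tanh(self.drive))
        return out


def render_line(blocks: list[list[float]], radio_on: list[bool],
                radio: Radioizer) -> list[list[float]]:
    """Apply the effect only to blocks where the radio is on; dry blocks pass through."""
    return [radio.process(block) if on else list(block)
            for block, on in zip(blocks, radio_on)]


radio = Radioizer(sample_rate=48000.0)
dry_line = [[0.5, -0.5] * 256 for _ in range(4)]  # stand-in for stored dialog blocks
# Radio on for the first half of the line, switched off mid-sentence.
wet_line = render_line(dry_line, [True, True, False, False], radio)
```

Raising `drive` pushes the saturation harder, which could be one crude starting point for a damaged-radio variant.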

And of course, this doesn’t even touch some of the Virtual Reality issues we’ve already discussed.

I’d love to hear your thoughts on how exactly making professional-grade DSP effects available to game developers has pushed the industry forward.

Back in 2000 at Microsoft, we tried very hard to get runtime DSP effects into games for the reasons outlined above, and designed the Xbox audio system accordingly. We even put in a dedicated 56000 DSP chip specifically to support these effects; we knew that pro audio DSP companies were very familiar with that chip and had experience programming it. And there were a couple of attempts to use custom DSP effects, but in the end it was a bit too cumbersome to use and the industry wasn’t quite ready for it.

The emergence of game audio engines and platforms such as Wwise and FMOD, combined with the increase in CPU power and memory, finally enabled people to create DSP effects and put them directly into game engines. One other thing we’re starting to see is DSP effects specifically designed for the game industry; for example, there are effects designed to create sound variations or specifically to create creature sounds. That to me is very exciting!

In your article “6 Surprising Facts About Composing Music for Video Games” you write, “Because you don’t know when various story elements will actually happen (it depends on the player), when composing for video games you have to write your music to be flexible, and know how to quickly change from ‘wandering around’ music to ‘battling for your life,’ while not sounding obvious or abrupt.” How can composers handle this context-switching naturally?

There have literally been whole books written about this! Part of the fun of working in games is that we’re still figuring this all out. What does it mean to take music, an inherently linear medium, and integrate it into video games, an inherently non-linear medium? Some of the techniques involve breaking music into small(ish) segments, anywhere from a couple of measures to several full musical phrases. As the game plays, special software in the game called the “audio engine” takes those smaller segments and lines them up to play. Sort of like Mozart’s “musical dice” game, but with a bit more finesse.
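The segment-queuing idea can be sketched as a small graph of segments, where each segment lists which segments may follow it and the engine picks the next one only at a segment boundary. The segment names, the `transition` bridge segments, and the selection rule below are illustrative assumptions; engines such as Wwise or FMOD Studio expose this through their own authoring tools rather than code like this. Choosing randomly among compatible followers is the “musical dice” part.

```python
import random

# Hypothetical segment library: name -> (music state, segments allowed to follow).
SEGMENTS = {
    "explore_a": ("explore", ["explore_b", "explore_c", "to_combat"]),
    "explore_b": ("explore", ["explore_a", "explore_c", "to_combat"]),
    "explore_c": ("explore", ["explore_a", "explore_b", "to_combat"]),
    "to_combat": ("transition", ["combat_a", "combat_b"]),
    "combat_a": ("combat", ["combat_b", "to_explore"]),
    "combat_b": ("combat", ["combat_a", "to_explore"]),
    "to_explore": ("transition", ["explore_a", "explore_b", "explore_c"]),
}


def next_segment(current: str, desired_state: str, rng: random.Random) -> str:
    """Pick what plays after `current` finishes, given the state the game wants."""
    followers = SEGMENTS[current][1]
    # Prefer a follower already in the desired state, then a bridge, then anything legal.
    in_state = [s for s in followers if SEGMENTS[s][0] == desired_state]
    bridges = [s for s in followers if SEGMENTS[s][0] == "transition"]
    return rng.choice(in_state or bridges or followers)


def play(start: str, desired_states: list[str], seed: int = 0) -> list[str]:
    """Queue one segment per boundary; desired_states[i] is the game state at boundary i."""
    rng = random.Random(seed)
    sequence = [start]
    for state in desired_states:
        sequence.append(next_segment(sequence[-1], state, rng))
    return sequence


print(play("explore_a", ["explore", "combat", "combat", "explore"]))
```

Because the switch waits for the current segment to end and routes through a bridge segment, the change from exploring to combat lands on a musical boundary instead of cutting off mid-phrase.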

Another technique is vertical layering. Imagine you’re doing a horror game and you’d like to be able to adjust how creepy the music is. You might record some aleatoric high string parts. When the music plays normally, though, you keep these muted. Only as the player walks down the increasingly scary hallway might you start to slowly fade in those string tracks.
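Vertical layering can be sketched as a set of stems that all play in sync, with each stem's volume driven by a single game parameter. Here a hypothetical 0-to-1 “dread” value (for example, how far down the hallway the player is) fades the aleatoric strings in between 0.3 and 0.8, and each gain moves at a limited rate so the change is gradual. The `Layer` fields, the thresholds, and the two-second fade time are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    fade_in_start: float  # parameter value where the layer starts to appear
    fade_in_end: float    # parameter value where it reaches full volume
    gain: float = 0.0     # current linear gain, smoothed over time


def target_gain(layer: Layer, dread: float) -> float:
    """Linear ramp from 0 to 1 between the layer's start and end thresholds."""
    if dread <= layer.fade_in_start:
        return 0.0
    if dread >= layer.fade_in_end:
        return 1.0
    return (dread - layer.fade_in_start) / (layer.fade_in_end - layer.fade_in_start)


def update_mix(layers: list[Layer], dread: float, dt: float,
               fade_time_s: float = 2.0) -> None:
    """Move each layer's gain toward its target by at most dt / fade_time_s per update."""
    max_step = dt / fade_time_s
    for layer in layers:
        error = target_gain(layer, dread) - layer.gain
        layer.gain += max(-max_step, min(max_step, error))


layers = [
    Layer("bed", fade_in_start=-1.0, fade_in_end=0.0, gain=1.0),  # always fully on
    Layer("aleatoric_strings", fade_in_start=0.3, fade_in_end=0.8),
]
for _ in range(4):  # player is deep in the hallway; update every 0.5 s
    update_mix(layers, dread=0.8, dt=0.5)
    print([(layer.name, layer.gain) for layer in layers])
```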

Those two techniques, “horizontal resequencing” and “vertical resequencing,” are at the heart of a lot of game music. All the major game audio engines out there (programs like Wwise, FMOD Studio, Elias, CRI, and Fabric) support those basic ideas along with various enhancements. That said, there are a lot of other things we try, and people are always coming up with new ideas and implementations, either extensions of horizontal and vertical resequencing or altogether new techniques.

For those hoping to work in the video game sound design industry, what advice would you have? Anything stand out in your career as instrumental to opening up opportunities?

If you’re specifically talking about sound design, then you should get well versed in the various game audio engines, and even game engines themselves. In one study, a very large percentage of “game sound designer wanted” job postings (yes, many companies hire full-time employees for this) require knowledge of game audio tools such as Wwise and FMOD, and many also specifically list “scripting,” which is a type of simple computer programming. Properly placing sounds and music into games is actually quite technical, and companies increasingly expect their sound design team to have the skill set to do it. Fortunately for sound designers (and composers), the most popular game audio tools are completely free to download and learn. The company charges a fee only when a specific game uses the technology, and then it is the game developer who pays for the license.

In my own career, I was fortunate enough to have gotten specific skills (music composition and computer programming) just at a time when both of those were needed to make a career in game audio. So I would definitely say, based on both creative and technical needs of the current day, you can do your career a favor by making sure you have a good grasp of the tools, technologies and how they are used to address the creative and artistic challenges we have in games.

One word of caution, though. In games, we sometimes get so caught up in the tech that it's easy to forget that, at the end of the day, it’s all about what comes out of the speakers. The very best tech won’t make up for mediocre sound design or poorly mixed music. A successful game sound designer will both push the technology and keep the creative/aesthetic bar high.

As a follow-up question, I thought your piece on how video game composers can get more gigs by venturing into sound design was excellent. To turn the question around, how can sound designers get more composing gigs?

I think that’s a harder route, mainly because most successful composers have spent years or decades mastering an instrument and/or practicing composing. So if you were, let’s say, a tremendous Foley artist but had never learned how to read music or play an instrument, you’d find it quite difficult to suddenly start composing.

That said, a lot of people who are primarily sound designers have also worked in music, either as composers outright or as players. So the first thing would be to get your composing/production chops up to snuff. Listen to game music that’s out there for the kinds of jobs you’re looking for. Interested in working for a company like Zynga on professionally produced mobile games? Listen to their music. Practice your production skills.

And then don’t be shy! I’ve been doing game music for 30 years and I still get nervous the first time I submit a piece to the game designer. You are probably a better composer than you think you are.

Also, find your forte. I know one very in-demand composer who is known for being able to write great “cute” music, just the sort that keeps his dance card filled in mobile/casual games. And I know that “big orchestra” is not an area where I can compete with the likes of Gordy Haab, Neal Acree, or Lennie Moore. It’s a little humbling, but recognizing your own strengths and weaknesses can go a long way toward sustaining a career in game music/sound.

Have you used iZotope’s Iris for game sound design?

I have Iris in my standard ambiences template. I’m a very visually oriented person, so I find the ability to shape the harmonic content of sound incredibly useful. I used it in a bed for a game I’m currently working on called “Mutant Football League.” One of the teams’ stadiums is in space, and I’ve gotten some great sci-fi/alien ambiences for it using Iris.

What other iZotope products have you used? RX?

I’m not sure that I know any game audio team that hasn’t used RX. I’ve received VO files from game developers that on first listen sounded utterly unusable, but contained some really good performances. RX, in the right hands, can work miracles on those.